3 一般性优化原则¶

本文更新于 2018.12.01

参考: https://blog.csdn.net/wuhui_gdnt/article/details/79042412

格式说明:

- 重点, 需理解

- 次要重点

- 还未理解的内容

- zzq注解 批注,扩展阅读资料等

- note/warning等注解: 原文内的注解或自己添加的备忘信息

本章讨论可以提升运行在基于Intel微架构Haswell, IvyBridge, SandyBridge, Westmere, Nehalem, 增强Intel Core微架构与Intel Core微架构的处理器上应用程序性能的通用优化技术. 这些技术利用了在第2章”Intel 64 and IA-32 Processor Architectures””中描述的微架构. 关注多核处理器, 超线程技术与64位模式应用程序的优化指引在第8章“多核与超线程技术”, 第9章“64位模式编程指引”中描述.

优化性能的实践关注在3个领域:

- 代码生成的工具与技术.

- 工作负荷性能特征的分析, 以及它与微架构子系统的相互作用.

- 根据目标微架构(或微架构家族)调整代码以提升性能.

首先汇总工具使用的一些提示以简化前两个任务. 本章余下部分将关注在对目标微架构代码生成或代码调整的建议上. 本章解释了Intel C++编译器, Intel Fortran编译器及其他编译器的优化技术.

3.1 性能工具¶

Intel提供了几个工具来辅助优化应用程序性能, 包括编译器, 性能分析器以及多线程化工具.

3.1.1 Intel C++与Fortran编译器¶

Intel编译器支持多个操作系统(Windows*, Linux*, Mac OS*以及嵌入式).Intel编译器优化性能并向应用程序开发者提供对先进特性的访问:

面向32位或64位Intel处理器优化的灵活性.

兼容许多集成开发环境或第三方编译器.

利用目标处理器架构的自动优化特性.

减少对不同处理器编写不同代码的自动编译器优化.

支持跨Windows, Linux与Mas OS的通用编译器特性, 包括:

- 通用优化设置.

- 缓存管理特性.

- 进程间优化(Interprocedural optimization, IPO)的方法.

- 分析器指导的优化(profile-guided optimization, PGO)的方法.

- 多线程支持.

- 浮点算术浮点与一致性支持.

- 编译器优化与向量化报告.

3.1.2 一般编译器建议¶

总的来说, 对目标微架构调整好的编译器被期望能匹配或超出手动编码. 不过, 如果注意到了被编译代码的性能问题, 某些编译器(像Intel C++与Fortran编译器)允许程序员插入内置函数(intrinsics)或内联汇编对生成的代码加以控制.如果使用了内联汇编, 使用者必须核实生成的代码质量良好, 性能优先.

缺省的编译器选项定位于普通情形. 优化可能由编译器缺省做出, 如果它对大多数程序都有好处. 如果性能问题的根源是编译器部分一个差劲的选择, 使用不同的选项或以别的编译器编译器目标模块也许能解决问题.

3.1.3 VTune性能分析器¶

VTune使用性能监控硬件来收集你应用程序的统计数据与编码信息, 以及它与微架构的交互. 这允许软件工程师测量一个给定微架构工作负载的性能特征. VTune支持所有当前与以前的Intel处理器家族.

VTune性能分析器提供两种反馈:

- 由使用特定的编码建议或微架构特性所获得的性能提升的一个指示

- 以特定的指标而言, 程序中的改变提升还是恶化了性能的信息

VTune性能分析器还提供若干工作负载特征的测量, 包括:

- 作为工作负载中可获取指令级并行度指标的 吞吐率

- 作为缓存及内存层级重点指标的数据传输局部性

- 作为数据访问时延分摊(amortization)有效性指标的数据传输并行性

注解

在机器一部分中提升性能不一定对总体性能带来显著提升.提升某个特定指标的性能, 可能会降低总体性能.

在合适的地方, 在本章中的编程建议包括对VTune性能分析器事件的结果, 它提供了遵循建议所达成性能提升的可测量数据. 更多如何使用VTune分析器, 参考该应用程序的在线帮助.

3.2 处理器全景¶

许多编程建议从Intel Core微架构跨越到Haswell微架构都工作良好. 不过, 存在一个建议对某个微架构的好处超出其他微架构的情形. 其中一些建议是:

- 指令解码吞吐率是重要的.另外, 利用已解码ICache, 循环流检测器(Loop Stream Detector, LSD)以及宏融合(macro-fusion)可以进一步提升前端的性能

- 生成利用4个解码器, 并应用微融合(micro-fusion)与宏融合的代码, 使3个简单解码器中的每个都不受限于处理包含一个微操作(micro-op)的简单指令

- 在基于Sandy Bridge, IvyBridge及Haswell微架构的处理器上, 最优前端性能的代码大小与已解码ICache相关

- 寄存器部分写(partial register write)的依赖性可以导致不同程度的性能损失. 要避免来自寄存器部分更新的伪依赖性(false dependence), 使用完整的寄存器更新及扩展移动

- 使用合适的支持依赖性消除的指令(比如PXOR, SUB, XOR, XOPRS)

- 硬件预取通常可以减少数据与指令访问的实际内存时延. 不过不同的微架构可能要求某些定制的修改来适应每个微架构特定的硬件预取实现

3.2.1 CPUID发布策略与兼容代码策略¶

当希望在所有处理器世代上有最优性能时, 应用程序可以利用CPUID指令来识别处理器世代, 将处理器特定的指令集成到源代码中. Intel C++编译器支持对不同的目标处理器集成不同版本的代码. 在运行时执行哪些代码的选择在CPU识别符上做出. 面向不同处理器世代的二进制码可以在程序员或编译器的控制下生成.

zzq注解 和hyperscan中的fat runtime特性相似? 如何做到?

对面向多代微架构的应用程序, 最小的二进制代码大小以及单代码路径是重要的, 兼容代码策略是最好的. 使用为Intel Core微架构开发, 结合Intel微架构Nehalem的技术优化应用程序, 当运行在基于当前或将来Intel 64及IA-32世代的处理器上时, 有可能提升代码效率与可扩展性.

3.2.2 透明缓存参数策略¶

如果CPUID指令支持功能页(function leaf)4, 也称为确定性缓存参数页, 该页以确定性且前向兼容的方式报告缓存层级的每级缓存参数. 该页覆盖Intel 64与IA-32处理器家族.

对于依赖于缓存级(cache level)特定参数的编程技术, 使用确定性缓存参数允许软件以与未来Intel 64及IA-32处理器世代前向兼容, 且与缓存大小不同的处理器相互兼容的方式实现技术.

3.2.3 线程化策略与硬件多线程支持¶

Intel 64与IA-32处理器家族以两个形式提供硬件多线程支持: 双核技术与HT技术.

为了完全发挥在当前及将来世代的Intel 64与IA-32处理器中硬件多线程的性能潜力, 软件必须在应用程序设计中拥抱线程化的做法. 同时, 为应付最大范围的已安装机器, 多线程软件应该能够无故障地运行在没有硬件多线程支持的单处理器上, 并且在单个逻辑处理器上达成的性能应该与非线程的实现可相比较(如果这样的比较可以进行). 这通常要求将一个多线程应用程序设计为最小化线程同步的开销.关于多线程的额外指引在第8章“多核与超线程技术”中讨论.

3.3 编程规则, 建议及调整提示¶

本节包括规则, 建议与提示, 面向的工程师是:

- 修改源代码来改进性能(user/source规则)

- 编写汇编器或编译器(assembly/compiler规则)

- 进行详细的性能调优(调优建议)

编程建议使用两个尺度进行重要性分级:

- 局部影响(高, 中或低)指一个建议对给定代码实例性能的影响

- 普遍性(Generality)(高, 中或低)衡量在所有应用程序领域里这些实例的发生次数. 普遍性也可认为是“频率”

这些建议是大概的. 它们会依赖于编程风格, 应用程序域, 以及其他因素而变化.

高, 中与低(H, M与L)属性的目的是给出, 如果实现一个建议, 可以预期的性能提升的相对程度.

因为预测应用程序中一段特定代码是不可能的, 优先级提升不能直接与应用程序级性能提升相关. 在观察到应用程序级性能提升的情形下, 我们提供了该增益的定量描述(仅供参考). 在影响被认为不适用的情形下, 不分配优先级.

3.4 优化前端¶

优化前端包括两个方面:

- 维持对执行引擎稳定的微操作供应 — 误预测分支会干扰微操作流, 或导致执行引擎在非构建代码路径(non-architectedcode path)中的微操作流上浪费执行资源. 大多数这方面的调整集中在分支预测单元. 常见的技术在3.4.1节”分支预测优化”中讨论.

- 供应微操作流尽可能利用执行带宽与回收带宽 — 对于Intel Core微架构与Intel Core Duo处理器家族, 这方面集中在维持高的解码吞吐率. 在Sandy Bridge中, 这方面集中在从已解码ICache保持热代码(hod code?)运行. 最大化Intel Core微架构解码吞吐率的技术在3.4.2节”取指与解码优化”中讨论.

3.4.1 分支预测优化¶

分支优化对性能有重要的影响. 通过理解分支流并改进其可预测性, 可以显著提升代码的速度.

有助于分支预测的优化有:

- 将代码与数据保持在不同的页面.这非常重要;更多信息参考3.6节“优化内存访问”

- 尽可能消除分支

- 将代码安排得与静态分支预测算法一致

- 在自旋等待循环(spin-wait loop)中使用PAUSE指令

- 内联函数, 并使调用与返回成对

- 在需要时循环展开, 使重复执行的循环的迭代次数不多于16(除非这会导致代码的大小过度增长)

- 避免在一个循环里放置两条条件分支指令, 使得两者都有相同的目标地址, 并且同时属于(即包含它们最后字节地址在内)同一个16字节对齐的代码块

3.4.1.1 消除分支¶

消除分支提高了性能, 因为:

- 它减少了误预测的可能性

- 它减少了所要求的分支目标缓冲(Branch Target Buffer, BTB)项. 永远不会被采用的条件分支不消耗BTB资源.

消除分支有4个主要的方法:

- 安排代码使得基本块连续

- 展开循环, 就像3.4.1.7节”循环展开”讨论的那样

- 使用CMOV指令

- 使用SETCC指令

zzq注解 CMOV是条件MOV

以下规则适用于分支消除:

Assembly/Compiler编程规则1.(影响MH, 普遍性M) 安排代码使基本块连续, 并消除不必要的分支.

Assembly/Compiler编程规则2.(影响M, 普遍性ML) 使用SETCC及CMOV指令来尽可能消除不可预测条件分支. 对可预测分支不要这样做. 不要使用这些指令消除所有的不可预测条件分支(因为归咎于要求执行1个条件分支两条路径的要求, 使用这些指令将导致执行开销). 另外, 将一个条件分支转换为SETCC或CMOV是以控制流依赖交换数据依赖, 限制了乱序引擎的能力. 在调优时, 注意到所有的Intel 64与IA-32处理器通常具有非常高的分支预测率. 始终被误预测的分支通常很少见. 仅当增加的计算时间比一个误预测分支的预期代价要少, 才使用这些指令.

考虑一行条件依赖于其中一个常量的C代码:

X = (A < B) ? CONST1: CONST2;

代码有条件地比较两个值A和B.如果条件成立, 设置X为CONST1;否则设置为CONST2. 一个等效于上面C代码的汇编代码序列可以包含不可预测分支, 如果在这两个值中没有相关性.

例子3-1显示了带有不可预测分支的汇编代码. 不可预测分支可以通过使用SETCC指令移除. 例子3-2显示了没有分支的优化代码.

例子3-1. 带有一个不可预测分支的汇编代码:

cmp a, b ; Condition

jae L30 ; Conditional branch

mov ebx const1 ; ebx holds X

jmp L31 ; Unconditional branch

L30:

mov ebx, const2

L31:

例子3-2. 消除分支的优化代码:

xor ebx, ebx ; Clear ebx (X in the C code)

cmp A, B

setge bl ; When ebx = 0 or 1

; OR the complement condition

sub ebx, 1 ; ebx=11...11 or 00...00

and ebx, CONST3 ; CONST3 = CONST1-CONST2

add ebx, CONST2 ; ebx=CONST1 or CONST2

例子3-2中的优化代码将EBX设为0, 然后比较A和B.如果A不小于B, EBX被设为1. 然后递减EBX, 并与常量值的差进行AND. 这将EBX设置为0或值的差.通过将CONST2加回EBX, 正确的值写入EBX.在CONST2等于0时, 可以删除最后一条指令.

zzq注解 分析:

// setge后

if(A < B)

ebx = 0

ebx-1 = 11..11

ebx & (const1-const2) = const1-const2

ebx+const2 = const1

else

ebx = 1

ebx-1 = 00..00

ebx & (const1-const2) = 0

ebx+const2 = const2

删除分支的另一个方法是使用CMOV及FCMOV指令. 例子3-3显示了如何使用CMOV修改一个TEST及分支指令序列, 消除一个分支. 如果这个TEST设置了相等标记, EBX中的值将被移到EAX. 这个分支是数据依赖的, 是一个典型的不可预测分支.

例子3-3. 使用CMOV指令消除分支:

test ecx, 3

jne next_block

mov eax, ebx

next_block:

; To optimize code, combine jne and mov into one cmovcc instruction

; that checks the equal flag

test ecx, 3 ; Test the flags

cmove eax, ebx ; If the equal flag is set, move

; ebx to eax- the 1H: tag no longer needed

zzq注解 对应的C代码:

if(ecx == 3)

eax = eab;

3.4.1.2 spin-wait和idle loop¶

Pentium 4处理器引入了一条新的PAUSE指令;在Intel 64与IA-32处理器实现中, 在架构上, 这个指令是一个NOP.

对于Pentium 4与后续处理器, 这条指令作为代码序列是spin-wait循环的提示. 在这样的循环里没有一条PAUSE指令, 在退出这个循环时, Pentium 4处理器可能遭遇严重的性能损失, 因为处理器可能检测到一个可能的内存次序违例(memory order violation). 插入PAUSE指令显著降低了内存次序违例的可能性, 结果提升了性能.

在例子3-4中, 代码自旋直到内存位置A匹配保存在寄存器EAX的值. 在保护临界区时, 在生产者-消费者序列中, 这样的代码序列通常用于屏障(barrier)或其他同步.

例子3-4. PAUSE指令的使用:

lock: cmp eax, a

jne loop

; Code in critical section:

loop: pause

cmp eax, a

jne loop

jmp lock

3.4.1.3 静态预测¶

在BTB中没有历史的分支(参考3.4.1节“分支预测优化”)使用一个静态预测算法来预测:

- 预测无条件分支将被采用

- 预测间接分支将不被采用

下面规则适用于静态消除:

Assembly/Compiler编程规则3.(影响M, 普遍性H) 安排代码与静态分支预测算法一致: 使紧跟条件分支的fall-through代码成为带有前向目标分支的likely目标, 或使其成为带有后向目标分支的unlikely目标.

例子3-5展示了静态分支预测算法. 一个IF-THEN条件的主体被预测.

例子3-5. 静态分支预测算法:

// Forward condition branches not taken (fall through)

IF<condition> {....

↓

}

IF<condition> {...

↓

}

// Backward conditional branches are taken

LOOP {...

↑ −− }<condition>

// Unconditional branches taken

JMP

------>

zzq注解 fall-through就是像switch/case语句里的default那样的情况

例3-6与例3-7提供了用于静态预测算法的基本规则. 在例子3-6中, 后向分支(jc Begin) 第一次通过时不在BTB里;因此, BTB不会发布一个预测. 不过, 静态预测器预测该分支将被采用, 因此不会发生误预测.

例子3-6. 静态的采用预测:

Begin: mov eax, mem32

and eax, ebx

imul eax, edx

shld eax, 7

jc Begin

在例子3-7中第一个分支指令(jc Begin)是一个条件前向分支. 在第一次通过时它不在BTB里, 但静态预测器将预测这个分支将fall through. 静态预测算法正确地预测call Convert指令将被采用, 即使在该分支在BTB中拥有任何分支历史之前.

例子3-7. 静态的不采用预测

mov eax, mem32

and eax, ebx

imul eax, edx

shld eax, 7

jc Begin

mov eax, 0

Begin: call Convert

Intel Core微架构不使用静态预测启发式. 不过, 为了维持Intel 64与IA-32处理器间的一致性, 软件应该缺省维护这个静态预测启发式.

3.4.1.4. 内联, 调用与返回¶

返回地址栈机制(return address stack mechanism)扩充了静态与动态预测器来特别地优化调用与返回. 它有16项, 足以覆盖大多数程序的调用深度. 如果存在一条一连串超过16个嵌套调用与返回的链, 性能可能会恶化.

在Intel NetBurst微架构中追踪缓存(Trace Cache)为调用与返回维护分支预测信息. 只要调用或返回的追踪维持在追踪缓存里, 调用与返回目标维持不变, 上面描述的返回地址栈的深度限制将不会妨碍性能.

要启用返回栈机制, 调用与返回必须成对匹配. 如果这完成了, 以一个影响性能的方式超出栈深度的可能性非常低.

下面规则适用于内联, 调用及返回:

Assembly/Compiler编程规则4.(影响MH, 普遍性MH) 近程调用(near call)必须与近程返回匹配, 而远程调用(far call)必须与远程返回匹配. 将返回地址压栈并跳转到被调用的例程是不建议的, 因为它创建了调用与返回的一个失配.

调用与返回是高代价的;出于以下原因使用内联:

- 可消除参数传递开销

- 在编译器中, 内联函数可带来更多优化机会

- 如果被内联的例程包含分支, 调用者额外的上下文可以提高例程内分支预测

- 在小函数里, 如果该函数被内联了, 一个误预测分支导致的性能损失更小

Assembly/Compiler编程规则5.(影响MH, 普遍性MH) 选择性内联一个函数, 如果这样做会降低代码大小, 或者如果这是小函数且该调用点经常被执行.

Assembly/Compiler编程规则6.(影响H, 普遍性H) 不要内联函数, 如果这样做会使工作集大小超出可以放入追踪缓存的程度.

Assembly/Compiler编程规则7.(影响ML, 普遍性ML) 如果存在一连串超过16个嵌套调用与返回;考虑使用内联改变程序以减少调用深度.

Assembly/Compiler编程规则8.(影响ML, 普遍性ML) 倾向内联包含低预测率分支的小函数. 如果分支误预测导致RETURN被过早预测为被采用, 可能会导致性能损失.

Assembly/Compiler编程规则9.(影响L, 普遍性L) 如果函数最后的语句是对另一个函数的调用, 考虑将该调用转换为跳转(jump). 这将节省该调用/返回开销和return stack buffer中的项目.

Assembly/Compiler编程规则10.(影响M, 普遍性L) 不要在一个16字节块里放4个以上分支.

Assembly/Compiler编程规则11.(影响M, 普遍性L) 不要在一个16字节块里放2个以上循环结束分支(end loop branch).

3.4.1.5 代码对齐¶

小心安排代码可以提高缓存与内存的局部性. 可能(被预测执行的?)的基本块序列应在内存里连续放置. 这可能涉及从该序列移除不可能(被执行的)的代码, 比如处理错误条件的代码. 参考3.7节“预取”, 关于优化指令预取器的部分.

Assembly/Compiler编程规则12.(影响M, 普遍性H) When executing code from the DSB(Decoded Icache), direct branches that are mostly taken should have all their instruction bytes in a 64B cache line and nearer the end of that cache line. Their targets should be at or near the beginning of a 64B cache line.

When executing code from the legacy decode pipeline, direct branches that are mostly taken should have all their instruction bytes in a 16B aligned chunk of memory and nearer the end of that 16B aligned chunk. Their targets should be at or near the beginning of a 16B aligned chunk of memory.

Assembly/Compiler编程规则13.(影响M, 普遍性H) 如果一块条件代码不太可能执行, 它应该被放在程序的另一个部分. 如果它非常可能不执行, 而且代码局部性是个问题, 它应该被放在不同的代码页.

3.4.1.6 分支类型选择¶

间接分支与调用的缺省预测目标是fall-through路径. 当该分支有可用的硬件预测时, fall-through预测会被覆盖. 对一个间接分支, 分支预测硬件的预测分支目标是之前执行的分支目标.

归咎于不良的代码局部性或病态的分支冲突问题, 如果没有分支预测可用, fall-through路径的缺省预测才是一个大问题. 对于间接调用, 预测fall-through路径通常不是问题, 因为, 执行将可能回到相关返回之后的指令.

在间接分支后立即放置数据会导致性能问题. 如果该数据是全0, 它看起来像一长串对内存目的地址的ADD, 这会导致资源冲突并减慢分支恢复.类似, 紧跟间接分支的数据对分支预测硬件看起来可能像分支, 会造成跳转执行其他数据页. 这会导致后续的自修改(self-modifying)代码问题.

Assembly/Compiler编程规则14.(影响M, 普遍性L) 在出现间接分支时, 尝试使一个间接分支最有可能的目标紧跟在该间接分支后. 或者, 如果间接分支是普遍的, 但它们不能由分支预测硬件预测, 以一条UD2指令根在间接分支后, 它将阻止处理器顺着fall-through路径解码.

从代码构造(比如switch语句, 计算的GOTO(computed GOTO)或通过指针的调用)导致的间接分支可以跳转到任意数目的位置. 如果代码序列是这样的, 大多数时间一个分支的目标是相同的地址, 那么BTB在大多数时间将预测准确. 因为仅被采用(非fall-through)目标会保存在BTB里, 带有多个被采用目标的间接分支会有更低的预测率.

通过引入额外的条件分支, 所保存目标的实际数目可以被增加. 向一个目标添加一个条件分支是有成效的, 如果:

- 分支目标(direction)与导致该分支的分支历史有关;也就是说, 不仅是最后的目标, 还有如何来到这个分支

- 源/目标对足够常见, 值得使用额外的分支预测能力.这可能增加总体的分支误预测数, 同时改进间接分支的误预测.如果误预测分支数目非常大, 收益率下降

User/Source编程规则1.(影响M, 普遍性L) 如果间接分支具有2个以上经常采用的目标, 并且其中至少一个是与分支历史有关, 那么将该间接分支转换为一棵树, 其中在一个或多个间接分支之前是这些目标的条件分支.对与分支历史有关的间接分支的常用目标应用这个“剥离”.

这个规则的目的是通过提升分支的可预测性(即使以添加更多分支为代价)来减少误预测的总数. 添加的分支必须是可预测的. 这样可预测性的一个原因是与前面分支历史的强关联. 也就是说, 前面分支采取的目标是考虑中分支目标的良好指示.

例子3-8显示了一个间接分支目标与前面的一个条件分支目标相关的简单例子.

例子3-8. 带有两个倾向目标的间接分支:

function ()

{

int n = rand(); // random integer 0 to RAND_MAX

if ( ! (n & 0x01) ) { // n will be 0 half the times

n = 0; // updates branch history to predict taken

}

// indirect branches with multiple taken targets

// may have lower prediction rates

switch (n) {

case 0: handle_0(); break; // common target, correlated with

// branch history that is forward taken

case 1: handle_1(); break; // uncommon

case 3: handle_3(); break; // uncommon

default: handle_other(); // common target

}

}

很难通过分析确定相关性, 对于编译器及汇编语言程序员. 评估剥离以及不剥离时的性能, 从一个编程工作得到最好的性能, 可能是富有成效的.

以相关分支历史剥离一个间接分支的最受青睐目标的一个例子显示在例子3-9中.

例子3-9. 降低间接分支误预测的一个剥离技术:

function ()

{

int n = rand(); // Random integer 0 to RAND_MAX

if( ! (n & 0x01) ) THEN

n = 0; // n will be 0 half the times

if (!n) THEN

handle_0(); // Peel out the most common target

// with correlated branch history

else {

switch (n) {

case 1: handle_1(); break; // Uncommon

case 3: handle_3(); break; // Uncommon

default: handle_other(); // Make the favored target in

// the fall-through path

}

}

}

3.4.1.7 循环展开¶

展开循环的好处有:

- 展开分摊了分支的开销, 因为它消除了分支以及一部分管理induction variable的代码

- 展开允许主动地调度(或流水线化)该循环以隐藏时延. 随着依赖链延伸展露出关键路径, 如果你有足够的空闲寄存器来保存变量的生命期, 这是有用的

- 展开向其他优化手段展露出代码, 比如移除重复的load, 公共子表达式消除等

zzq注解 Induction variable:

In computer science, an induction variable is a variable that gets increased

or decreased by a fixed amount on every iteration of a loop, or is a linear

function of another induction variable.For example, in the following loop,

i and j are induction variables:

循环展开的潜在代价是:

- 过度展开或展开非常大的循环会导致代码尺寸增加. 如果展开后循环不再能够放入Trace Cache(TC), 这是有害的

- 展开包含分支的循环增加了对BTB容量的需求. 如果展开后循环的迭代次数是16或更少, 分支预测器应该能够正确地预测在方向更迭(alternate direction)的循环体中的分支

Assembly/Compiler编程规则15.(影响H, 普遍性M) 展开小的循环, 直到分支与归纳变量的开销占据(通常)不到10%的循环执行时间.

Assembly/Compiler编程规则16.(影响H, 普遍性M) 避免过度展开循环;这可能冲击(thrash)追踪缓存或指令缓存.

Assembly/Compiler编程规则17.(影响M, 普遍性M) 展开经常执行且有可预测迭代次数的循环, 将迭代次数降到16以下, 包括16. 除非它增加了代码大小, 使得工作集不再能放入追踪或指令缓存. 如果循环体包含多个条件分支, 那么展开使得迭代次数是16/(条件分支数).

例子3-10显示了循环展开如何使得其他优化成为可能.

例子3-10. 循环展开

// 展开前:

do i = 1, 100

if ( i mod 2 == 0 ) then a( i ) = x

else a( i ) = y

enddo

// 展开后:

do i = 1, 100, 2

a( i ) = y

a( i+1 ) = x

enddo

在这个例子中, 循环执行100次将X赋给每个偶数元素, 将Y赋给每个奇数元素. 通过展开循环, 你可以更高效地进行赋值, 在循环体内移除了一个分支.

3.4.1.8 分支预测的编译器支持¶

编译器能为Intel处理器生成提高分支预测效率的代码. Intel C++编译器采取的手段包括:

- 将代码与数据保持在不同的页

- 使用条件移动指令来消除分支

- 生成与静态分支预测算法一致的代码

- 在合适的地方进行内联

- 如果迭代次数可预测, 展开循环

使用分析器指导优化(PGO), 编译器可以布置基本块, 消除一个函数最频繁执行路径上的分支, 或至少提高它们的可预测性. 在源代码级别, 无需担心分支预测. 更多信息, 参考Intel C++编译器文档.

3.4.2 取指与解码优化¶

Intel Core微架构提供了几个机制来增加前端吞吐量. 利用这些特性的技术在下文中讨论.

3.4.2.1 对微融合(micro-fusion)的优化¶

操作寄存器和内存操作数的指令解码得到的微操作比对应的”寄存器-寄存器”版本要多. 使用”寄存器-寄存器”版本替换前者指令等效的工作通常要求一个2条指令的序列. 这个序列很可能导致取指带宽的降低.

Assembly/Compiler编程规则18.(影响ML, 普遍性M) 要提高取指/解码吞吐率, 优先使用内存风格而非仅寄存器风格的指令, 如果该指令可从微融合中受益的话.

下面例子是可以由所有的解码器处理的某些微融合类型:

所有对内存的写, 包括写立即数. 在内部写执行两个独立的微操作: 写地址(store-address) 与写数据(store-data).

所有在寄存器与内存间进行“read-modify”(load+op)的指令, 例如:

ADDPS XMM9, OWORD PTR[RSP+40] FADD DOUBLE PTR [RDI+RSI*8] XOR RAX, QWORD PTR [RBP+32]

所有形式为“读且跳转”的指令, 例如:

JMP [RDI+200] RET

带有立即操作数与内存操作数的CMP与TEST.

带有RIP相对取址的Intel 64指令, 在以下情形中不会被微融合:

在需要一个额外的立即数时, 例如:

CMP [RIP+400], 27 MOV [RIP+3000], 142

当需要一个RIP用于控制流目的时, 例如:

JMP [RIP+5000000]

在这些情形里, Intel Core微架构以及Intel微架构Sandy Bridge从解码器0提供一个2微操作的流, 导致了解码带宽是轻微损失, 因为2微操作的流必须从与之匹配(it was aligned with)的解码器前进到解码器0.

在访问全局数据时, RIP取址是常见的. 因为它不能从微融合获益, 编译器可能考虑以其他内存取址方式访问全局数据.

3.4.2.2 对宏融合(macro-fusion)的优化¶

宏融合将两条指令合并为一个微操作. Intel Core微架构在有限的情形会完成这种硬件优化.

宏融合对的第一条指令必须是一条CMP或TEST指令. 这条指令可以是REG-REG, REG-IMM, 或是一个微融合的REG-MEM比较. 第二条指令(在指令流中相邻)应该是一个条件分支.

因为这些对在基本的迭代式编程序列中是常见的, 即使在非重新编译的二进制代码中宏融合也能提高性能. 所有的解码器每周期可以解码一个宏融合对, 连同最多3条其他指令, 形成每周期5条指令的解码带宽峰值.

每条宏融合后指令使用单个分发执行. 这个过程降低了时延, 因为从分支误预测的损失中移除了一个周期. 软件还可以获得其他的融合好处: 增加rename与retire带宽, 储存更多正在进行的(in-flight)指令, 或从更少比特表示更多工作中带来能耗降低.

下面的列表给出你何时可以使用宏融合的细节:

在进行比较时, CMP或TEST可以被融合:

REG-REG. 例如: CMP EAX,ECX; JZ label REG-IMM. 例如: CMP EAX,0x80; JZ label REG-MEM. 例如: CMP EAX,[ECX]; JZ label MEM-REG. 例如: CMP [EAX],ECX; JZ label

使用所有条件跳转的TEST可以被融合.

在Intel Core微架构中, 仅使用以下条件跳转的CMP可以被融合.这些条件跳转检查进位标记 (CF)或零标记(ZF).能够进行宏融合的条件跳转是:

JA或JNBE JAE或JNB或JNC JE或JZ JNA或JBE JNAE或JC or JB JNE或JNZ

在比较MEM-IMM时(比如CMP [EAX], 0X80; JZ label), CMP与TEST不能被融合. Intel Core微架构在64位模式中不支持宏融合.

Intel微架构Nehalem在宏融合中支持以下增强:

带有以下条件跳转的CMP可以被融合(在Intel Core微架构中不支持):

JL或JNGE JGE或JNL JLE或JNG JG或JNLE

在64位模式在支持宏融合.

在Intel微架构Sandy Bridge中增强的宏融合支持总结在表3-1中, 在2.3.2.1节与例子3-15中有额外的信息.

表3-1. 在Intel微架构Sandy Bridge中可宏融合指令

| 指令 | TEST | AND | CMP | ADD | SUB | INC | DEC |

|---|---|---|---|---|---|---|---|

| JO/JNO | Y | Y | N | N | N | N | N |

| JC/JB/JAE/JNB | Y | Y | Y | Y | Y | N | N |

| JE/JZ/JNE/JNZ | Y | Y | Y | Y | Y | Y | Y |

| JNA/JBE/JA/JNBE | Y | Y | Y | Y | Y | N | N |

| JS/JNS/JP/JPE/JNP/JPO | Y | Y | N | N | N | N | N |

| JL/JNGE/JGE/JNL/JLE/JNG/JG/JNLE | Y | Y | Y | Y | Y | Y | Y |

Assembly/Compiler编程规则19.(影响M, 普遍性ML) 尽可能应用宏融合, 使用支持宏融合的指令对. 如果可能优先使用TEST. 在可能时使用 无符号 变量以及 无符号

跳转. 尝试逻辑化地验证一个变量在比较时刻是非负的. 在可能时避免MEM-IMM形式的CMP或TEST. 不过, 不要添加任何指令来避免使用MEM-IMM形式的指令.

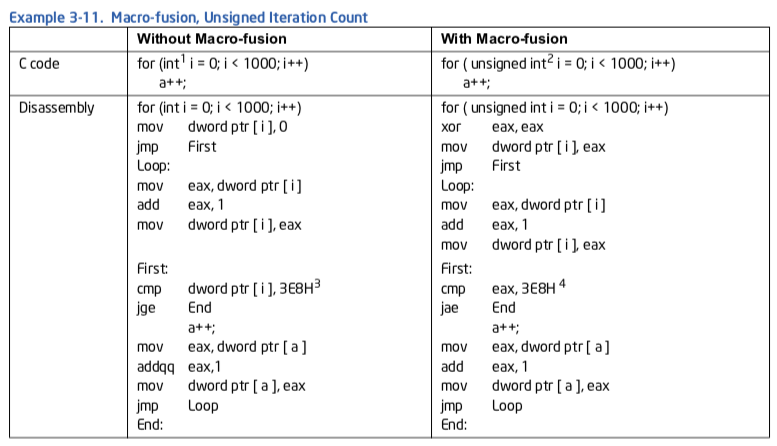

例子3-11. 宏融合, 无符号迭代计数

注:

- 有符号迭代子抵制了macro-fusion

- 无符号迭代子兼容macro-fusion

- CMP MEM-IMM, JGE 抑制 macro-fusion.

- CMP REG-IMM, JAE 允许 macro-fusion.

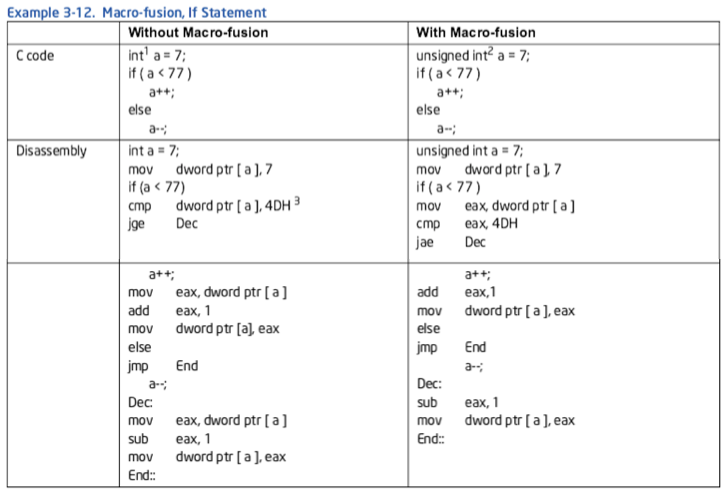

例子3-12. 宏融合, if语句

注:

- 有符号迭代子抵制了macro-fusion

- 无符号迭代子兼容macro-fusion

- CMP MEM-IMM, JGE 抑制 macro-fusion.

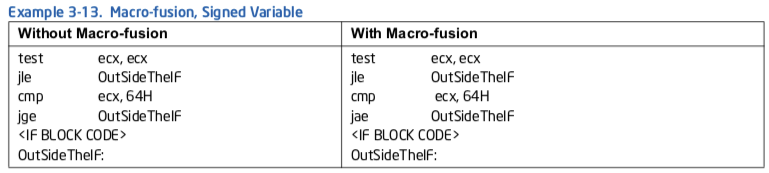

Assembly/Compiler编程规则20.(影响M, 普遍性ML) 当可以在逻辑上确定一个变量在比较时刻是非负的, 软件可以启用宏融合;在比较一个变量与0时, 适当地使用TEST来启用宏融合.

例子3-13. 宏融合, 有符号变量

对于有符号或无符号变量“a”;就标志位(flags)而言, “CMP a, 0”与“TEST a, a”产生相同的结果. 因为TEST更容易宏融合, 为了启用宏融合, 软件可以使用“TEST a, a”替换“CMP a, 0”.

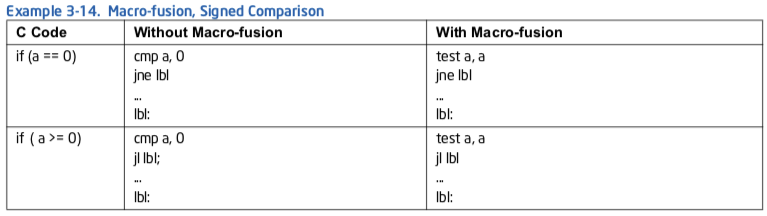

例子3-14. 宏融合, 有符号比较

Intel微架构Sandy Bridge使更多使用条件分支的算术与逻辑指令能宏融合. 在循环中在ALU端口已经拥塞的地方, 执行其中一个宏融合可以缓解压力, 因为宏融合指令仅消耗端口5, 而不是一个ALU端口加上端口5.

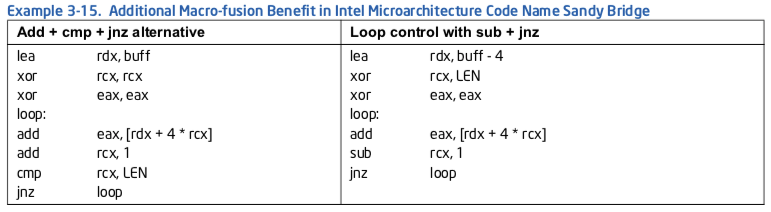

在例子3-15中, 循环“add/cmp/jnz”包含了两个可以通过端口0,1,5分发的ALU指令. 因此端口5绑定其中一条ALU指令的可能性更高, 导致JNZ等一个周期. 循环“sub/jnz”, ADD/SUB/JNZ可以在同一个周期里分发的可能性增加了, 因为仅SUB可绑定到端口0, 1, 5.

例子3-15. 在Intel微架构Sandy Bridge中额外的宏融合好处

3.4.2.3 长度改变前缀(Length-Changing Prefixes: LCP)¶

一条指令的长度最多可以是15个字节. 某些前缀可以动态地改变解码器所知道的一条指令的长度. 通常, 预解码单元将假定没有LCP, 估计指令流中一条指令的长度. 当预解码器在取指行中遇到一个LCP时, 它必须使用一个更慢的长度解码算法. 使用这个更慢的解码算法, 预解码器在6个周期里解码取指行, 而不是通常的1周期. 机器流水线正常排队吞吐率通常不能隐藏LCP带来的性能损失.

可以动态改变一条指令长度的前缀包括:

- 操作数大小前缀(0x66)

- 地址大小前缀(0x67)

在基于Intel Core微架构的处理器以及在Intel Core Duo与Intel Core Solo处理器中, 指令MOV DX, 01234h受制于LCP暂停(stall). 包含imm16作为固定编码部分, 但不需要LCP来改变这个立即数大小的指令不受LCP暂停的影响. 在64位模式中, REX前缀(4xh)可以改变两类指令的大小, 但不会导致一个LCP性能损失.

如果在一个紧凑的循环中发生LCP暂停, 它会导致显著的性能下降.当解码不受一个瓶颈时, 就像在有大量浮点的代码中, 孤立的LCP暂停通常不会造成性能下降.

Assembly/Compiler编程规则21.(影响MH, 普遍性MH) 倾向生成使用imm8或imm32值, 而不是imm16值的代码.

如果需要imm16, 将相同的imm32读入一个寄存器并使用寄存器中字的值.

两次LCP暂停

受制于LCP暂停且跨越一条16字节取指行边界的指令会导致LCP暂停被触发两次. 以下对齐情况会触发两次LCP暂停:

- 使用一个MODR/M与SIB字节编码的一条指令, 且取指行边界在MODR/M与SIB字节之间.

- 使用寄存器与立即数字节偏移取址模式访问内存, 从取指行偏移13处开始的一条指令.

第一次暂停是对第一个取指行, 第二次暂停是对第二个取指行.两次LCP暂停导致一个11周期的解码性能损失.

下面的例子导致LCP暂停一次, 不管指令第一个字节在取指行的位置:

ADD word ptr [EDX], 01234H

ADD word ptr 012345678H[EDX], 01234H

ADD word ptr [012345678H], 01234H

以下指令在取指行偏移13处开始时, 导致两次LCP暂停:

ADD word ptr [EDX+ESI], 01234H

ADD word ptr 012H[EDX], 01234H

ADD word ptr 012345678H[EDX+ESI], 01234H

为了避免两次LCP暂停, 不要使用受制于LCP暂停, 使用SIB字节编码或字节位移取址 (byte displacement)模式的指令.

伪LCP暂停(False LCP Stall)

伪LCP暂停具有与LCP暂停相同的特征, 但发生在没有任何imm16值的指令上.

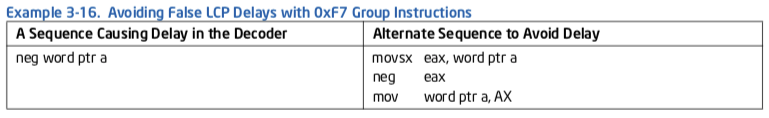

当(a)带有LCP、使用F7操作码编码的指令, (b)位于取指行的偏移14处时, 发生伪LCP暂停. 这些指令是: not, neg, div, idiv, mul与imul. 伪LCP造成时延, 因为在下一个取指行之前指令长度解码器不能确定指令的长度, 这个取指行持有在指令MODR/M字节中的实际操作码.

以下技术可以辅助避免伪LCP暂停:

- 将F7指令组的所有短操作提升为长操作, 使用完整的32不比特版本.

- 确保F7操作码不出现在取指行的偏移14处.

Assembly/Compiler编程规则22.(影响M, 普遍性ML) 确保使用0xF7操作码字节的指令不在取指行的偏移4处开始;避免使用这些指令操作16位数据, 将短数据提升为32位.

例子3-16. 避免伴随0xF7指令组的伪LCP暂停

3.4.2.4 优化循环流检测器(LSD)¶

在Intel Core微架构中, 满足以下准则的循环由LSD检测, 并从指令队列重播(replay)来供给解码器.

- 必须不超过4个16字节的取指

- 必须不超过18条指令

- 可以包含不超过4被采用的分支, 并且它们不能是RET

- 通常应该具有超过64个迭代

在Intel微架构Nehalem中, 这样改进循环流寄存器:

- 在指令已解码队列(IDQ, 参考2.5.2节)中缓冲已解码微操作来供给重命名/分配阶段

- LSD的大小被增加到28个微操作

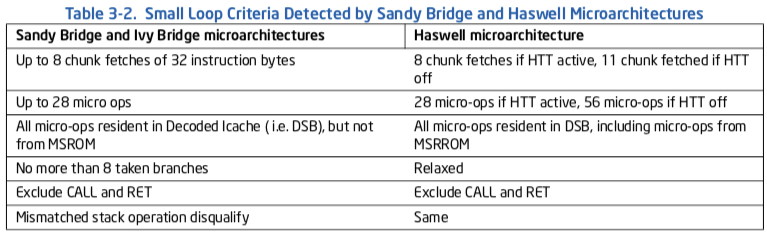

在Sandy Bridge与Haswell微架构中, LSD与微操作队列实现持续改进.它们具有如下特性:

表3-2. 由Sandy Bridge与Haswell微架构检测的小循环准则

许多计算密集循环, 搜索以及字符串移动符合这些特征. 这些循环超出了BPU预测能力, 总是导致一个分支误预测.

Assembly/Compiler编程规则23.(影响MH, 普遍性MH) 将长指令序列的循环分解为不超过LSD大小的短指令块的循环.

Assembly/Compiler编程规则24.(影响MH, 普遍性M) 如果展开后的块超过LSD的大小, 避免展开包含LCP暂停的循环.

3.4.2.5 在Intel Sandy Bridge中利用LSD微操作发布带宽¶

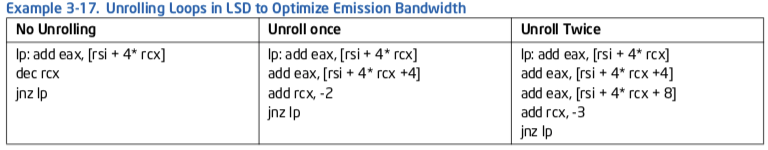

LSD持有构成小的“无限”循环的微操作. 来自LSD的微操作在乱序引擎中分配. 在LSD中的循环以一个回到循环开头的被采用分支结束. 在循环末尾的被采用分支总是在该周期中最后分配的微操作. 在循环开头的指令总是在下一个周期分配. 如果代码性能受限于前端带宽, 未使用的分配槽造成分配中的一个空泡, 并导致性能下降.

在Intel微架构Sandy Bridge中, 分配带宽是每周期4个微操作. 当LSD中的微操作数导致最少未使用分配槽时, 性能是最好的. 你可以使用循环展开来控制在LSD中的微操作数.

在例子3-17里, 代码对所有的数组元素求和.原始的代码每次迭代加一个元素. 每次迭代有3个微操作, 都在一个周期中分配.代码的吞吐率是每周期一个读.

在展开循环一次时, 每次迭代有5个微操作, 它们在2个周期中分配. 代码的吞吐率仍然是每周期一个读.因此没有性能提高.

在展开循环两次时, 每次迭代有7个微操作, 仍然在2个周期中分配. 因为在每个周期中可以执行两个读, 这个代码具有每两个周期3个读操作的潜在吞吐率.

例子3-17. 在LSD中展开循环优化发布带宽

3.4.2.6 为已解码ICache优化¶

已解码ICache是Intel微架构Sandy Bridge的一个新特性. 从已解码ICache运行代码有两个好处:

- 以更高的带宽为乱序引擎提供微操作

- 前端不需要解码在已解码ICache中的代码.这节省了能源

zzq注解 这里说已解码ICache应指的是前端里的Trace Cache.

在已解码ICache与legacy解码流水线间切换需要开销. 如果你的代码频繁地在前端与已解码ICache间切换, 性能损失会超过仅从遗留流水线运行.

要确保”热”代码从已解码ICache供给:

- 确保每块热代码少于500条指令.特别地, 在一个循环中不要展开超过500条指令. 这应该会激活已解码ICache存留, 即使启用了超线程.

- 对于在一个循环中有非常大块计算的应用程序, 考虑循环分解(loop-fission): 将循环分解为适合已解码ICache(大小)的多个循环, 而不是会溢出的单个循环.

- 如果程序可以确定每核仅运行一个线程, 它可以将热代码块大小增加到大约1000条指令.

密集的读-修改-写代码(dense read-modify-write code)

已解码ICache对每32字节对齐内存块仅可以保持最多18个微操作. 因此, 以少量字节编码, 但有许多微操作的指令高度集中的代码, 可能超过18个微操作的限制, 不能进入已解码ICache. 读-修改-写(RMW)指令是这样指令的一个良好例子.

RMW指令接受一个内存源操作数, 一个寄存器源操作数, 并使用内存源操作数作为目标. 相同的功能可以两到三条指令实现: 第一条读内存源操作数, 第二条使用第二个寄存器源操作数执行该操作, 最后的指令将结果写回内存. 这些指令通常导致相同数量的微操作, 但使用更多的字节来编码相同的功能.

一个可以广泛使用RMW指令的情形是当编译器积极地优化代码大小时.

下面是将热代码放入已解码ICache的某些可能解决方案:

- 以2到3条功能相同的指令替换RMW指令. 例如, “adc [rdi], rcx”只有3字节长;等效的序列“adc rax, [rdi]”+“mov [rdi], rax”是6个字节

- 对齐代码, 使密集部分被分开到两个32字节块. 在使用自动对齐代码的工具时, 这个解决方案是有用的, 并且不关心代码改变

- 通过在循环中添加多字节NOP展开代码. 注意这个解决方案对执行添加了微操作

为已解码ICache对齐无条件分支

对于进入已解码ICache的代码, 每个无条件分支是占据一个已解码ICache通道(way)的最后微操作. 因此, 每32字节对齐块仅3个无条件分支可以进入已解码ICache.

在跳转表与switch声明中无条件分支是频繁的. 下面是这些构造的例子, 以编写它们的方法, 使它们可以放入已解码ICache.

编译器为C++虚拟函数或DLL分发表创建跳转表. 每个无条件分支消耗5字节;因此最多7个无条件分支可与一个32字节块关联. 这样, 如果在每个32字节对齐块中无条件分支太密集, 跳转表可能不能放入已解码ICache. 这会导致在分支表前后执行的代码的性能下降.

解决方案是在分支表的分支中添加多个字节NOP指令. 这可能会增加代码大小, 小心使用. 不过, 这些NOP不会执行, 因此在后面的流水线阶段中不会有性能损失.

Switch-case构造代表了一个类似的情形. 每个case条件的求值导致一个无条件分支. 在放入一个对齐32字节块的每3条连续无条件分支上可以应用相同的多字节NOP方法.

在一个已解码ICache通道中的两个分支

已解码ICache可以在一个通道内保持两个分支. 在32字节对齐块中的密集分支, 或它们与其他指令的次序, 可能阻止该块中指令所有的微操作进入已解码ICache. 这不经常发生. 当它发生时, 你可以在代码合适的地方放置NOP指令. 要确保这些NOP指令表示热代码的部分.

Assembly/Compiler编程规则25.(影响M, 普遍性M) 避免在一个栈操作序列(POP, PUSH, CALL, RET)中放入对ESP的显式引用.

3.4.2.7 其他解码指引¶

Assembly/Compiler编程规则26.(影响ML, 普遍性L) 使用小于8字节长度的简单指令.

Assembly/Compiler编程规则27.(影响M, 普遍性MH) 避免使用改变立即数大小及位移 (displacement)的前缀.

长指令(超过7字节)会限制每周期解码指令数. 每个前缀增加指令长度1字节, 可能会限制解码器的吞吐率. 另外, 多前缀仅能由第一个解码器解码. 这些前缀也导致了解码时的时延. 如果不能避免改变立即数大小或位移的多前缀或一个前缀, 在因为其他原因暂停流水线的指令后调度它们.

3.5 优化Execution Core¶

现代处理器中的超标量(superscalar), 乱序执行包含多个执行硬件资源, 它可以并行地执行多条微指令. 这些资源通常保证微指令有效执行且时延固定. 利用并行度的一般指南是:

- 遵守3.4节中的规则, 最大化可用解码带宽和前端吞吐量. 这些规则包括有利的单micro-op指令和利用micro-fusion, 栈指针追踪器和macro-fusion

- 最大化rename带宽. 本节中讨论此项, 包括合理利用partial寄存器, ROB读端口以及对flags产生副作用的指令

- 在指令序列上调度recommendations以便多个依赖链同时存在于reservation station中, 这会保证你的代码利用最大的并行度

- 避免hazards, 最小化在执行核心中可能出现的时延, 让已分发的微指令快速地执行和回收

3.5.1 Instruction Selection¶

某些执行单元未被流水线化, 这竟味着微指令无法以连续的cycle分发, 吞吐量小于1每cycle.

一般可以先从每条指令关联的微指令的数量来考虑如何选择指令, 优先顺序是: 单micro-op指令, 小于4 micro-op的指令, 需要microsequencer ROM的指令(在microsequencer之外执行的micro-op需要额外开销)

Assembly/Compiler Coding Rule 28. (M impact, H generality) 优先使用单micro-op指令和时延更低的指令.

编译器一般会很好地进行指令选择, 这种情况下, 用户通常不需介入.

Assembly/Compiler Coding Rule 29. (M impact, L generality) 避免prefixes, 尤其是multiple non-0F-prefixed opcodes.

Assembly/Compiler Coding Rule 30. (M impact, L generality) 勿使用太多段寄存器.

Assembly/Compiler Coding Rule 31. (M impact, M generality) 避免使用复杂指令(如enter, leave, loop), 这些指令会产生4个以上的微指令, 需要更多cycle去解码; 使用简单指令序列替换之.

Assembly/Compiler Coding Rule 32. (MH impact, M generality) 使用push/pop来管理栈空间和处理函数调用/返回之间的调整, 而不要用enter/leave. 使用带有非0立即数的enter指令会在流水线中带来很大时延和误预测.

Theoretically, arranging instructions sequence to match the 4-1-1-1 template applies to processors based on Intel Core microarchitecture. However, with macro-fusion and micro-fusion capabilities in the front end, attempts to schedule instruction sequences using the 4-1-1-1 template will likely provide diminishing returns.

相反, 软件应遵守这些附加的解码器原则:

If you need to use multiple micro-op, non-microsequenced instructions, try to separate by a few single micro-op instructions. The following instructions are examples of multiple micro-op instruction not requiring micro-sequencer:

ADC/SBB CMOVcc Read-modify-write instructions

If a series of multiple micro-op instructions cannot be separated, try breaking the series into a different equivalent instruction sequence. For example, a series of read-modify-write instructions may go faster if sequenced as a series of read-modify + store instructions. This strategy could improve performance even if the new code sequence is larger than the original one.

3.5.1.1 Integer Divide¶

Typically, an integer divide is preceded by a CWD or CDQ instruction. Depending on the operand size, divide instructions use DX:AX or EDX:EAX for the dividend(被除数). The CWD or CDQ instructions sign-extend AX or EAX into DX or EDX, respectively. These instructions have denser encoding than a shift and move would be, but they generate the same number of micro-ops. If AX or EAX is known to be positive, replace these instructions with:

xor dx, dx

or

xor edx, edx

Modern compilers typically can transform high-level language expression involving integer division where the divisor(除数) is a known integer constant at compile time into a faster sequence using IMUL instruction instead. Thus programmers should minimize integer division expression with divisor whose value can not be known at compile time.

Alternately, if certain known divisor value are favored over other unknown ranges, software may consider isolating the few favored, known divisor value into constant-divisor expressions.

Section 10.2.4 describes more detail of using MUL/IMUL to replace integer divisions.

3.5.1.2 Using LEA¶

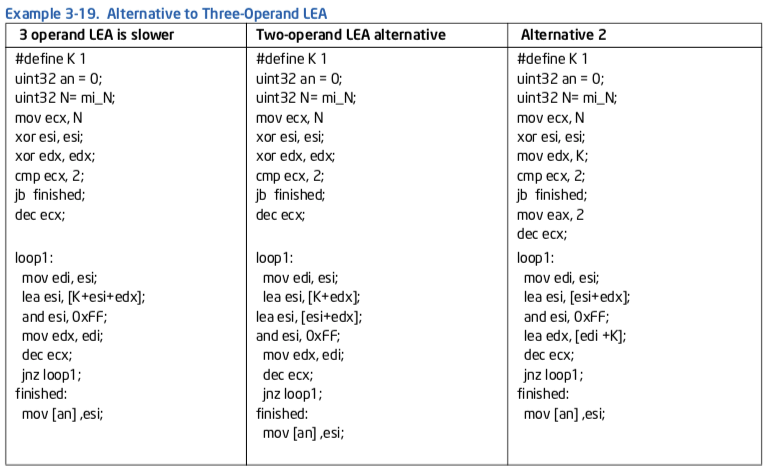

Intel Sandy Bridge微架构中, 对于LEA指令的性能有两项重大更改:

LEA can be dispatched via port 1 and 5 in most cases, doubling the throughput over prior generations. However this apply only to LEA instructions with one or two source operands.

For LEA instructions with three source operands and some specific situations, instruction latency has increased to 3 cycles, and must dispatch via port 1:

- LEA that has all three source operands: base, index, and offset.

- LEA that uses base and index registers where the base is EBP, RBP, or R13.

- LEA that uses RIP relative addressing mode.

- LEA that uses 16-bit addressing mode.

In some cases with processor based on Intel NetBurst microarchitecture, the LEA instruction or a sequence of LEA, ADD, SUB and SHIFT instructions can replace constant multiply instructions. The LEA instruction can also be used as a multiple operand addition instruction, for example

LEA ECX, [EAX + EBX + 4 + A]

Using LEA in this way may avoid register usage by not tying up registers for operands of arithmetic instructions. This use may also save code space.

If the LEA instruction uses a shift by a constant amount then the latency of the sequence of μops is shorter if adds are used instead of a shift, and the LEA instruction may be replaced with an appropriate sequence of μops. This, however, increases the total number of μops, leading to a trade-off.

Assembly/Compiler Coding Rule 33. (ML impact, L generality) If an LEA instruction using the scaled index is on the critical path, a sequence with ADDs may be better. If code density and bandwidth out of the trace cache are the critical factor, then use the LEA instruction.

3.5.1.3 ADC and SBB in Intel Microarchitecture Code Name Sandy Bridge¶

The throughput of ADC and SBB in Intel microarchitecture code name Sandy Bridge is 1 cycle, compared to 1.5-2 cycles in prior generation. These two instructions are useful in numeric handling of integer data types that are wider than the maximum width of native hardware.

3.5.1.4 Bitwise Rotation¶

Bitwise rotation can choose between rotate with count specified in the CL register, an immediate constant and by 1 bit. Generally, The rotate by immediate and rotate by register instructions are slower than rotate by 1 bit. The rotate by 1 instruction has the same latency as a shift.

Assembly/Compiler Coding Rule 34. (ML impact, L generality) Avoid ROTATE by register or ROTATE by immediate instructions. If possible, replace with a ROTATE by 1 instruction.

In Intel microarchitecture code name Sandy Bridge, ROL/ROR by immediate has 1-cycle throughput, SHLD/SHRD using the same register as source and destination by an immediate constant has 1-cycle latency with 0.5 cycle throughput. The “ROL/ROR reg, imm8” instruction has two micro-ops with the latency of 1-cycle for the rotate register result and 2-cycles for the flags, if used.

In Intel microarchitecture code name Ivy Bridge, The “ROL/ROR reg, imm8” instruction with immediate greater than 1, is one micro-op with one-cycle latency when the overflow flag result is used. When the immediate is one, dependency on the overflow flag result of ROL/ROR by a subsequent instruction will see the ROL/ROR instruction with two-cycle latency.

3.5.1.5 Variable Bit Count Rotation and Shift¶

In Intel microarchitecture code name Sandy Bridge, The “ROL/ROR/SHL/SHR reg, cl” instruction has three micro-ops. When the flag result is not needed, one of these micro-ops may be discarded, providing better performance in many common usages. When these instructions update partial flag results that are subsequently used, the full three micro-ops flow must go through the execution and retirement pipeline, experiencing slower performance. In Intel microarchitecture code name Ivy Bridge, executing the full three micro-ops flow to use the updated partial flag result has additional delay. Consider the looped sequence below:

loop:

shl eax, cl

add ebx, eax

dec edx ; DEC dosen't update carry, causing SHL to execute slower 3 micro-ops flow

jnz loop

The DEC instruction does not modify the carry flag. Consequently, the SHL EAX, CL instruction needs to execute the three micro-ops flow in subsequent iterations. The SUB instruction will update all flags. So replacing DEC with SUB will allow SHL EAX, CL to execute the two micro-ops flow.

3.5.1.6 Address Calculations¶

For computing addresses, use the addressing modes rather than general-purpose computations. Internally, memory reference instructions can have four operands:

- Relocatable load-time constant

- Immediate constant

- Base register

- Scaled index register

Note that the latency and throughput of LEA with more than two operands are slower (see Section 3.5.1.2) in Intel microarchitecture code name Sandy Bridge. Addressing modes that uses both base and index registers will consume more read port resource in the execution engine and may experience more stalls due to availability of read port resources. Software should take care by selecting the speedy version of address calculation.

In the segmented model, a segment register may constitute an additional operand in the linear address calculation. In many cases, several integer instructions can be eliminated by fully using the operands of memory references.

3.5.1.7 Clearing Registers and Dependency Breaking Idioms¶

Code sequences that modifies partial register can experience some delay in its dependency chain, but can be avoided by using dependency breaking idioms.

In processors based on Intel Core microarchitecture, a number of instructions can help clear execution dependency when software uses these instruction to clear register content to zero. The instructions include:

XOR REG, REG

SUB REG, REG

XORPS/PD XMMREG, XMMREG

PXOR XMMREG, XMMREG

SUBPS/PD XMMREG, XMMREG

PSUBB/W/D/Q XMMREG, XMMREG

In processors based on Intel microarchitecture code name Sandy Bridge, the instruction listed above plus equivalent AVX counter parts are also zero idioms that can be used to break dependency chains. Further- more, they do not consume an issue port or an execution unit. So using zero idioms are preferable than moving 0’s into the register. The AVX equivalent zero idioms are:

VXORPS/PD XMMREG, XMMREG

VXORPS/PD YMMREG, YMMREG

VPXOR XMMREG, XMMREG

VSUBPS/PD XMMREG, XMMREG

VSUBPS/PD YMMREG, YMMREG

VPSUBB/W/D/Q XMMREG, XMMREG

In Intel Core Solo and Intel Core Duo processors, the XOR, SUB, XORPS, or PXOR instructions can be used to clear execution dependencies on the zero evaluation of the destination register.

The Pentium 4 processor provides special support for XOR, SUB, and PXOR operations when executed within the same register. This recognizes that clearing a register does not depend on the old value of the register. The XORPS and XORPD instructions do not have this special support. They cannot be used to break dependence chains.

Assembly/Compiler Coding Rule 35. (M impact, ML generality) Use dependency-breaking-idiom instructions to set a register to 0, or to break a false dependence chain resulting from re-use of registers. In contexts where the condition codes must be preserved, move 0 into the register instead. This requires more code space than using XOR and SUB, but avoids setting the condition codes.

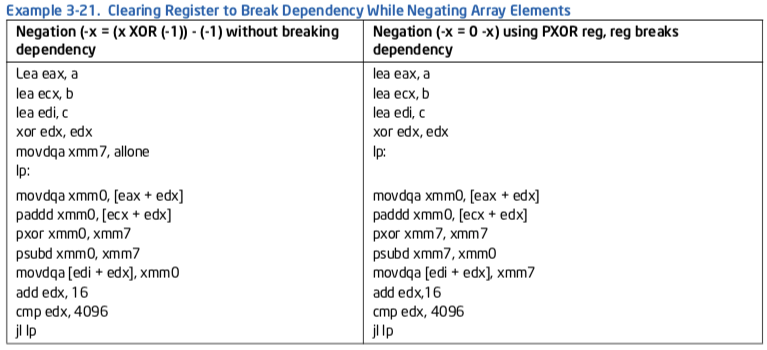

Example 3-21 of using pxor to break dependency idiom on a XMM register when performing negation on the elements of an array:

int a[4096], b[4096], c[4096];

for ( int i = 0; i < 4096; i++ )

C[i] = - ( a[i] + b[i] );

Assembly/Compiler Coding Rule 36. (M impact, MH generality) Break dependences on portions of registers between instructions by operating on 32-bit registers instead of partial registers. For moves, this can be accomplished with 32-bit moves or by using MOVZX.

Sometimes sign-extended semantics can be maintained by zero-extending operands. For example, the C code in the following statements does not need sign extension, nor does it need prefixes for operand size overrides:

static short INT a, b;

if (a == b) {

...

}

Code for comparing these 16-bit operands might be:

MOVZW EAX,[a]

MOVZW EBX,[b]

CMP EAX, EBX

These circumstances tend to be common. However, the technique will not work if the compare is for greater than, less than, greater than or equal, and so on, or if the values in eax or ebx are to be used in another operation where sign extension is required.

Assembly/Compiler Coding Rule 37. (M impact, M generality) Try to use zero extension or operate on 32-bit operands instead of using moves with sign extension.

The trace cache can be packed more tightly when instructions with operands that can only be repre- sented as 32 bits are not adjacent.

Assembly/Compiler Coding Rule 38. (ML impact, L generality) Avoid placing instructions that use 32-bit immediates which cannot be encoded as sign-extended 16-bit immediates near each other. Try to schedule μops that have no immediate immediately before or after μops with 32-bit immediates.

3.5.1.8 Compares¶

Use TEST when comparing a value in a register with zero. TEST essentially ANDs operands together without writing to a destination register. TEST is preferred over AND because AND produces an extra result register. TEST is better than CMP ..., 0 because the instruction size is smaller.

Use TEST when comparing the result of a logical AND with an immediate constant for equality or inequality if the register is EAX for cases such as:

IF (AVAR & 8) { }

The TEST instruction can also be used to detect rollover of modulo of a power of 2. For example, the C code:

IF ( (AVAR % 16) == 0 ) { }

can be implemented using:

TEST EAX, 0x0F

JNZ AfterIf

Using the TEST instruction between the instruction that may modify part of the flag register and the instruction that uses the flag register can also help prevent partial flag register stall.

Assembly/Compiler Coding Rule 39. (ML impact, M generality) Use the TEST instruction instead of AND when the result of the logical AND is not used. This saves μops in execution. Use a TEST of a register with itself instead of a CMP of the register to zero, this saves the need to encode the zero and saves encoding space. Avoid comparing a constant to a memory operand. It is preferable to load the memory operand and compare the constant to a register.

Often a produced value must be compared with zero, and then used in a branch. Because most Intel architecture instructions set the condition codes as part of their execution, the compare instruction may be eliminated. Thus the operation can be tested directly by a JCC instruction. The notable exceptions are MOV and LEA. In these cases, use TEST.

Assembly/Compiler Coding Rule 40. (ML impact, M generality) Eliminate unnecessary compare with zero instructions by using the appropriate conditional jump instruction when the flags are already set by a preceding arithmetic instruction. If necessary, use a TEST instruction instead of a compare. Be certain that any code transformations made do not introduce problems with overflow.

3.5.1.9 Using NOPs¶

Code generators generate a no-operation (NOP) to align instructions. Examples of NOPs of different lengths in 32-bit mode are shown below:

1-byte: XCHG EAX, EAX

2-byte: 66 NOP

3-byte: LEA REG, 0 (REG) (8-bit displacement)

4-byte: NOP DWORD PTR [EAX + 0] (8-bit displacement)

5-byte: NOP DWORD PTR [EAX + EAX*1 + 0] (8-bit displacement)

6-byte: LEA REG, 0 (REG) (32-bit displacement)

7-byte: NOP DWORD PTR [EAX + 0] (32-bit displacement)

8-byte: NOP DWORD PTR [EAX + EAX*1 + 0] (32-bit displacement)

9-byte: NOP WORD PTR [EAX + EAX*1 + 0] (32-bit displacement)

These are all true NOPs, having no effect on the state of the machine except to advance the EIP. Because NOPs require hardware resources to decode and execute, use the fewest number to achieve the desired padding.

The one byte NOP:[XCHG EAX,EAX] has special hardware support. Although it still consumes a μop and its accompanying resources, the dependence upon the old value of EAX is removed. This μop can be executed at the earliest possible opportunity, reducing the number of outstanding instructions and is the lowest cost NOP.

The other NOPs have no special hardware support. Their input and output registers are interpreted by the hardware. Therefore, a code generator should arrange to use the register containing the oldest value as input, so that the NOP will dispatch and release RS resources at the earliest possible opportunity.

Try to observe the following NOP generation priority:

- Select the smallest number of NOPs and pseudo-NOPs to provide the desired padding.

- Select NOPs that are least likely to execute on slower execution unit clusters.

- Select the register arguments of NOPs to reduce dependencies.

3.5.1.10 Mixing SIMD Data Types¶

Previous microarchitectures (before Intel Core microarchitecture) do not have explicit restrictions on mixing integer and floating-point (FP) operations on XMM registers. For Intel Core microarchitecture, mixing integer and floating-point operations on the content of an XMM register can degrade performance. Software should avoid mixed-use of integer/FP operation on XMM registers. Specifically:

- Use SIMD integer operations to feed SIMD integer operations. Use PXOR for idiom.

- Use SIMD floating-point operations to feed SIMD floating-point operations. Use XORPS for idiom.

- When floating-point operations are bitwise equivalent, use PS data type instead of PD data type. MOVAPS and MOVAPD do the same thing, but MOVAPS takes one less byte to encode the instruction.

3.5.1.11 Spill Scheduling¶

The spill scheduling algorithm used by a code generator will be impacted by the memory subsystem. A spill scheduling algorithm is an algorithm that selects what values to spill to memory when there are too many live values to fit in registers. Consider the code in Example 3-22, where it is necessary to spill either A, B, or C.

Example 3-22. Spill Scheduling Code:

LOOP

C := ...

B := ...

A := A + ...

For modern microarchitectures, using dependence depth information in spill scheduling is even more important than in previous processors. The loop-carried dependence in A makes it especially important that A not be spilled. Not only would a store/load be placed in the dependence chain, but there would also be a data-not-ready stall of the load, costing further cycles.

Assembly/Compiler Coding Rule 41. (H impact, MH generality) For small loops, placing loop invariants in memory is better than spilling loop-carried dependencies.

A possibly counter-intuitive result is that in such a situation it is better to put loop invariants in memory than in registers, since loop invariants never have a load blocked by store data that is not ready.

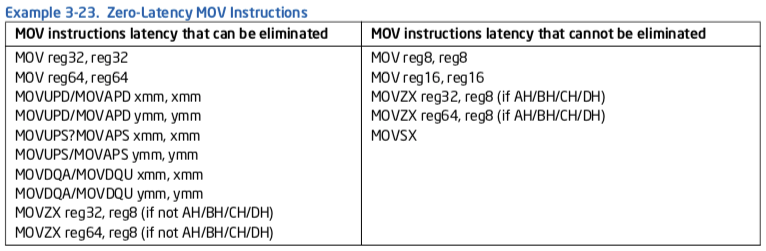

3.5.1.12 Zero-Latency MOV Instructions¶

In processors based on Intel microarchitecture code name Ivy Bridge, a subset of register-to-register move operations are executed in the front end (similar to zero-idioms, see Section 3.5.1.7). This conserves scheduling/execution resources in the out-of-order engine. Most forms of register-to-register

MOV instructions can benefit from zero-latency MOV. Example 3-23 list the details of those forms that qualify and a small set that do not.

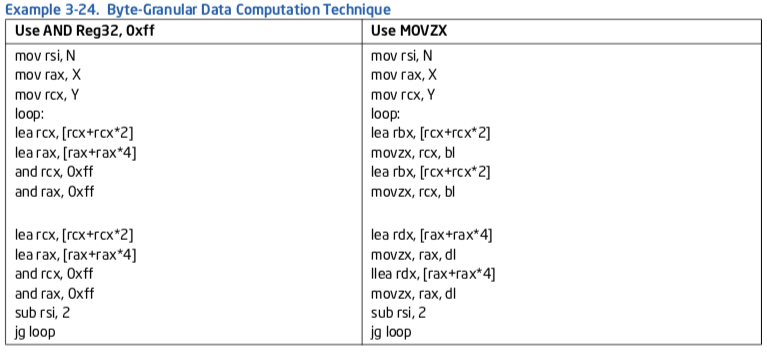

Example 3-24 shows how to process 8-bit integers using MOVZX to take advantage of zero-latency MOV enhancement. Consider:

X = (X * 3^N ) MOD 256;

Y = (Y * 3^N ) MOD 256;

When “MOD 256” is implemented using the “AND 0xff” technique, its latency is exposed in the result- dependency chain. Using a form of MOVZX on a truncated byte input, it can take advantage of zero- latency MOV enhancement and gain about 45% in speed.

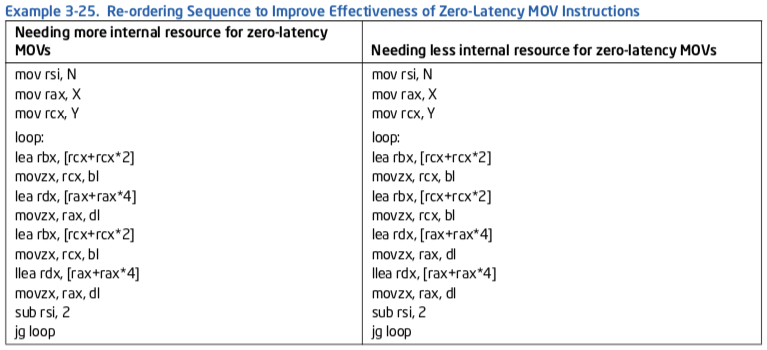

The effectiveness of coding a dense sequence of instructions to rely on a zero-latency MOV instruction must also consider internal resource constraints in the microarchitecture.

In Example 3-25, RBX/RCX and RDX/RAX are pairs of registers that are shared and continuously over- written. In the right-hand sequence, registers are overwritten with new results immediately, consuming less internal resources provided by the underlying microarchitecture. As a result, it is about 8% faster than the left-hand sequence where internal resources could only support 50% of the attempt to take advantage of zero-latency MOV instructions.

3.5.2 Avoiding Stalls in Execution Core¶

Although the design of the execution core is optimized to make common cases executes quickly. A micro-op may encounter various hazards, delays, or stalls while making forward progress from the front end to the ROB and RS. The significant cases are:

- ROB Read Port Stalls

- Partial Register Reference Stalls

- Partial Updates to XMM Register Stalls

- Partial Flag Register Reference Stalls

3.5.2.1 ROB Read Port Stalls¶

As a micro-op is renamed, it determines whether its source operands have executed and been written to the reorder buffer (ROB), or whether they will be captured “in flight” in the RS or in the bypass network. Typically, the great majority of source operands are found to be “in flight” during renaming. Those that have been written back to the ROB are read through a set of read ports.

Since the Intel Core microarchitecture is optimized for the common case where the operands are “in flight”, it does not provide a full set of read ports to enable all renamed micro-ops to read all sources from the ROB in the same cycle.

When not all sources can be read, a micro-op can stall in the rename stage until it can get access to enough ROB read ports to complete renaming the micro-op. This stall is usually short-lived. Typically, a micro-op will complete renaming in the next cycle, but it appears to the application as a loss of rename bandwidth.

Some of the software-visible situations that can cause ROB read port stalls include:

- Registers that have become cold and require a ROB read port because execution units are doing other independent calculations.

- Constants inside registers.

- Pointer and index registers.

In rare cases, ROB read port stalls may lead to more significant performance degradations. There are a couple of heuristics that can help prevent over-subscribing the ROB read ports:

- Keep common register usage clustered together. Multiple references to the same written-back register can be “folded” inside the out of order execution core.

- Keep short dependency chains intact. This practice ensures that the registers will not have been written back when the new micro-ops are written to the RS.

These two scheduling heuristics may conflict with other more common scheduling heuristics. To reduce demand on the ROB read port, use these two heuristics only if both the following situations are met:

- Short latency operations.

- Indications of actual ROB read port stalls can be confirmed by measurements of the performance event (the relevant event is RAT_STALLS.ROB_READ_PORT, see Chapter 19 of the Intel® 64 and IA-32 Architectures Software Developer’s Manual, Volume 3B).

If the code has a long dependency chain, these two heuristics should not be used because they can cause the RS to fill, causing damage that outweighs the positive effects of reducing demands on the ROB read port.

Starting with Intel microarchitecture code name Sandy Bridge, ROB port stall no longer applies because data is read from the physical register file.

3.5.2.2 Writeback Bus Conflicts¶

The writeback bus inside the execution engine is a common resource needed to facilitate out-of-order execution of micro-ops in flight. When the writeback bus is needed at the same time by two micro-ops executing in the same stack of execution units (see Table 2-17), the younger micro-op will have to wait for the writeback bus to be available. This situation typically will be more likely for short-latency instruc- tions experience a delay when it might have been otherwise ready for dispatching into the execution engine.

Consider a repeating sequence of independent floating-point ADDs with a single-cycle MOV bound to the same dispatch port. When the MOV finds the dispatch port available, the writeback bus can be occupied by the ADD. This delays the MOV operation.

If this problem is detected, you can sometimes change the instruction selection to use a different dispatch port and reduce the writeback contention.

3.5.2.3 Bypass between Execution Domains¶

Floating-point (FP) loads have an extra cycle of latency. Moves between FP and SIMD stacks have another additional cycle of latency.

Example:

ADDPS XMM0, XMM1

PAND XMM0, XMM3

ADDPS XMM2, XMM0

The overall latency for the above calculation is 9 cycles:

- 3 cycles for each ADDPS instruction.

- 1 cycle for the PAND instruction.

- 1 cycle to bypass between the ADDPS floating-point domain to the PAND integer domain.

- 1 cycle to move the data from the PAND integer to the second floating-point ADDPS domain.

To avoid this penalty, you should organize code to minimize domain changes. Sometimes you cannot avoid bypasses.

Account for bypass cycles when counting the overall latency of your code. If your calculation is latency- bound, you can execute more instructions in parallel or break dependency chains to reduce total latency.

Code that has many bypass domains and is completely latency-bound may run slower on the Intel Core microarchitecture than it did on previous microarchitectures.

3.5.2.4 Partial Register Stalls¶

General purpose registers can be accessed in granularities of bytes, words, doublewords; 64-bit mode also supports quadword granularity. Referencing a portion of a register is referred to as a partial register reference.

A partial register stall happens when an instruction refers to a register, portions of which were previously modified by other instructions. For example, partial register stalls occurs with a read to AX while previous instructions stored AL and AH, or a read to EAX while previous instruction modified AX.

The delay of a partial register stall is small in processors based on Intel Core and NetBurst microarchitectures, and in Pentium M processor (with CPUID signature family 6, model 13), Intel Core Solo, and Intel Core Duo processors. Pentium M processors (CPUID signature with family 6, model 9) and the P6 family incur a large penalty.

Note that in Intel 64 architecture, an update to the lower 32 bits of a 64 bit integer register is architecturally defined to zero extend the upper 32 bits. While this action may be logically viewed as a 32 bit update, it is really a 64 bit update (and therefore does not cause a partial stall).

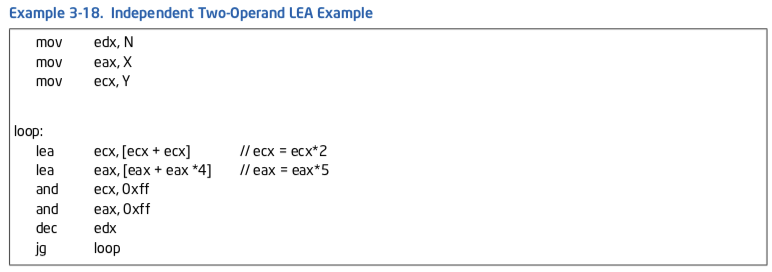

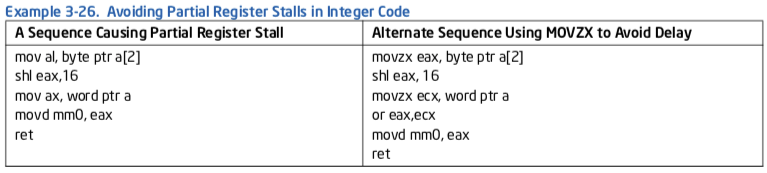

Referencing partial registers frequently produces code sequences with either false or real dependencies. Example 3-18 demonstrates a series of false and real dependencies caused by referencing partial regis- ters.

If instructions 4 and 6 (in Example 3-18) are changed to use a movzx instruction instead of a mov, then the dependences of instruction 4 on 2 (and transitively 1 before it), and instruction 6 on 5 are broken. This creates two independent chains of computation instead of one serial one.

Example 3-26 illustrates the use of MOVZX to avoid a partial register stall when packing three byte values into a register.

Starting with Intel microarchitecture code name Sandy Bridge and all subsequent generations of Intel Core microarchitecture, partial register access is handled in hardware by inserting a micro-op that merges the partial register with the full register in the following cases:

After a write to one of the registers AH, BH, CH or DH and before a following read of the 2-, 4- or 8- byte form of the same register. In these cases a merge micro-op is inserted. The insertion consumes a full allocation cycle in which other micro-ops cannot be allocated.

After a micro-op with a destination register of 1 or 2 bytes, which is not a source of the instruction (or the register’s bigger form), and before a following read of a 2-,4- or 8-byte form of the same register. In these cases the merge micro-op is part of the flow. For example:

MOV AX,[BX]

When you want to load from memory to a partial register, consider using MOVZX or MOVSX to avoid the additional merge micro-op penalty.

LEA AX, [BX+CX]

For optimal performance, use of zero idioms, before the use of the register, eliminates the need for partial register merge micro-ops.

3.5.2.5 Partial XMM Register Stalls¶

Partial register stalls can also apply to XMM registers. The following SSE and SSE2 instructions update only part of the destination register:

MOVL/HPD XMM, MEM64

MOVL/HPS XMM, MEM32

MOVSS/SD between registers

Using these instructions creates a dependency chain between the unmodified part of the register and the modified part of the register. This dependency chain can cause performance loss.

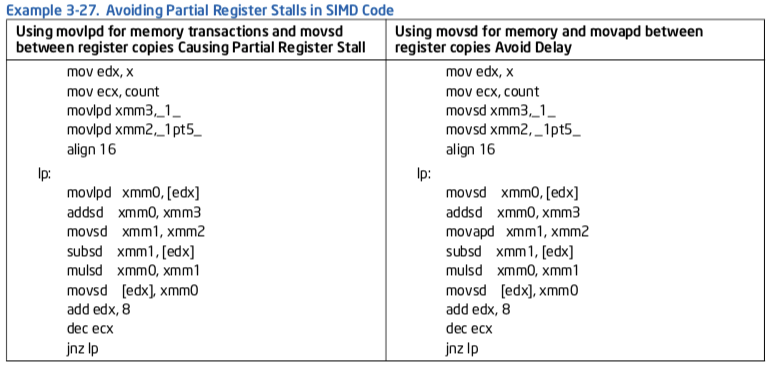

Example 3-27 illustrates the use of MOVZX to avoid a partial register stall when packing three byte values into a register.

Follow these recommendations to avoid stalls from partial updates to XMM registers:

Avoid using instructions which update only part of the XMM register.

If a 64-bit load is needed, use the MOVSD or MOVQ instruction.

If 2 64-bit loads are required to the same register from non continuous locations, use MOVSD/MOVHPD instead of MOVLPD/MOVHPD.

When copying the XMM register, use the following instructions for full register copy, even if you only want to copy some of the source register data:

MOVAPS MOVAPD MOVDQA

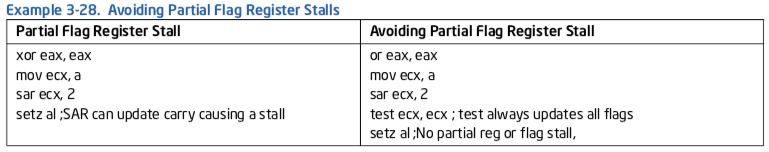

3.5.2.6 Partial Flag Register Stalls¶

A “partial flag register stall” occurs when an instruction modifies a part of the flag register and the following instruction is dependent on the outcome of the flags. This happens most often with shift instructions (SAR, SAL, SHR, SHL). The flags are not modified in the case of a zero shift count, but the shift count is usually known only at execution time. The front end stalls until the instruction is retired.

Other instructions that can modify some part of the flag register include CMPXCHG8B, various rotate instructions, STC, and STD. An example of assembly with a partial flag register stall and alternative code without the stall is shown in Example 3-28.

In processors based on Intel Core microarchitecture, shift immediate by 1 is handled by special hardware such that it does not experience partial flag stall.

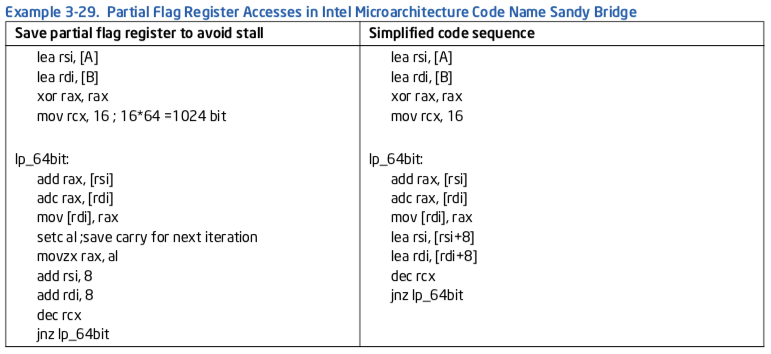

In Intel microarchitecture code name Sandy Bridge, the cost of partial flag access is replaced by the insertion of a micro-op instead of a stall. However, it is still recommended to use less of instructions that write only to some of the flags (such as INC, DEC, SET CL) before instructions that can write flags condi- tionally (such as SHIFT CL).

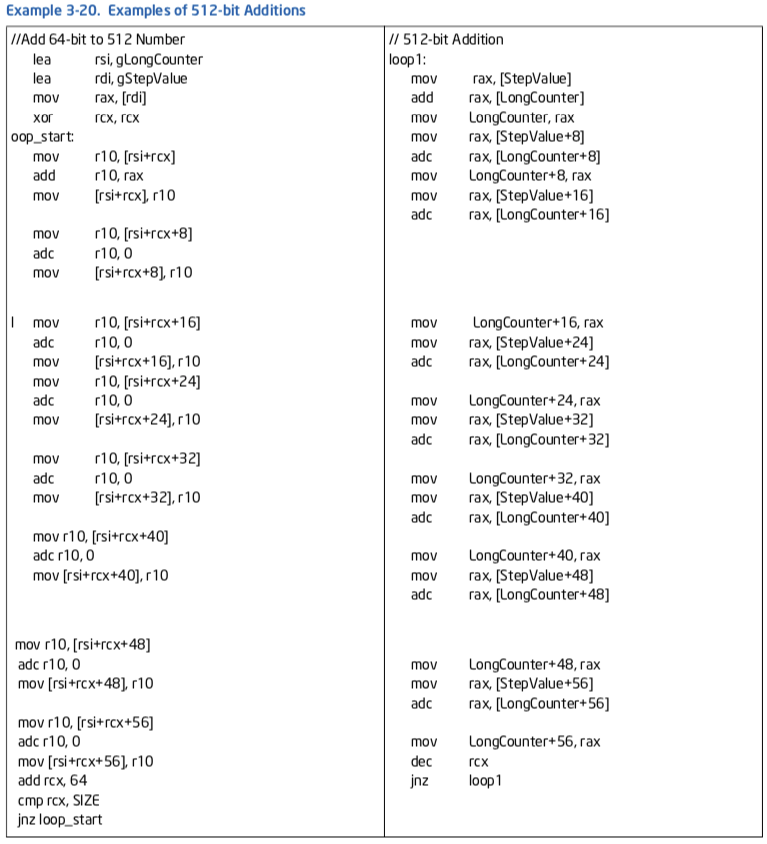

Example 3-29 compares two techniques to implement the addition of very large integers (e.g. 1024 bits). The alternative sequence on the right side of Example 3-29 will be faster than the left side on Intel microarchitecture code name Sandy Bridge, but it will experience partial flag stalls on prior microarchi- tectures.

3.5.2.7 Floating-Point/SIMD Operands¶

Moves that write a portion of a register can introduce unwanted dependences. The MOVSD REG, REG instruction writes only the bottom 64 bits of a register, not all 128 bits. This introduces a dependence on the preceding instruction that produces the upper 64 bits (even if those bits are not longer wanted). The dependence inhibits register renaming, and thereby reduces parallelism.

Use MOVAPD as an alternative; it writes all 128 bits. Even though this instruction has a longer latency, the μops for MOVAPD use a different execution port and this port is more likely to be free. The change can impact performance. There may be exceptional cases where the latency matters more than the depen- dence or the execution port.

Assembly/Compiler Coding Rule 42. (M impact, ML generality) Avoid introducing dependences with partial floating-point register writes, e.g. from the MOVSD XMMREG1, XMMREG2 instruction. Use the MOVAPD XMMREG1, XMMREG2 instruction instead.

The MOVSD XMMREG, MEM instruction writes all 128 bits and breaks a dependence.

The MOVUPD from memory instruction performs two 64-bit loads, but requires additional μops to adjust the address and combine the loads into a single register. This same functionality can be obtained using MOVSD XMMREG1, MEM; MOVSD XMMREG2, MEM+8; UNPCKLPD XMMREG1, XMMREG2, which uses fewer μops and can be packed into the trace cache more effectively. The latter alternative has been found to provide a several percent performance improvement in some cases. Its encoding requires more instruction bytes, but this is seldom an issue for the Pentium 4 processor. The store version of MOVUPD is complex and slow, so much so that the sequence with two MOVSD and a UNPCKHPD should always be used.

Assembly/Compiler Coding Rule 43. (ML impact, L generality) Instead of using MOVUPD XMMREG1, MEM for a unaligned 128-bit load, use MOVSD XMMREG1, MEM; MOVSD XMMREG2, MEM+8; UNPCKLPD XMMREG1, XMMREG2. If the additional register is not available, then use MOVSD XMMREG1, MEM; MOVHPD XMMREG1, MEM+8.

Assembly/Compiler Coding Rule 44. (M impact, ML generality) Instead of using MOVUPD MEM, XMMREG1 for a store, use MOVSD MEM, XMMREG1; UNPCKHPD XMMREG1, XMMREG1; MOVSD MEM+8, XMMREG1 instead.

3.5.3 矢量化¶

This section provides a brief summary of optimization issues related to vectorization. There is more detail in the chapters that follow.

Vectorization is a program transformation that allows special hardware to perform the same operation on multiple data elements at the same time. Successive processor generations have provided vector support through the MMX technology, Streaming SIMD Extensions (SSE), Streaming SIMD Extensions 2 (SSE2), Streaming SIMD Extensions 3 (SSE3) and Supplemental Streaming SIMD Extensions 3 (SSSE3).

Vectorization is a special case of SIMD, a term defined in Flynn’s architecture taxonomy to denote a single instruction stream capable of operating on multiple data elements in parallel. The number of elements which can be operated on in parallel range from four single-precision floating-point data elements in Streaming SIMD Extensions and two double-precision floating-point data elements in Streaming SIMD Extensions 2 to sixteen byte operations in a 128-bit register in Streaming SIMD Extensions 2. Thus, vector length ranges from 2 to 16, depending on the instruction extensions used and on the data type.

Intel C++编译器以三种方式支持矢量化:

- 可以在不需要用户干预的情况下生成SIMD代码

- 用户可以插入pragma语句以帮助编译器将代码矢量化

- 用户可以通过使用intrinsics显式地编写SIMD代码

To help enable the compiler to generate SIMD code, avoid global pointers and global variables. These issues may be less troublesome if all modules are compiled simultaneously, and whole-program optimization is used.

User/Source Coding Rule 2. (H impact, M generality) 尽可能使用最小的浮点数或SIMD数据类型, 以通过SIMD矢量达到更高的并行度. 例如, 在可能的时候使用单精度而不是双精度.

User/Source Coding Rule 3. (M impact, ML generality) Arrange the nesting of loops so that the innermost nesting level is free of inter-iteration dependencies. Especially avoid the case where the store of data in an earlier iteration happens lexically after the load of that data in a future iteration, something which is called a lexically backward dependence.

The integer part of the SIMD instruction set extensions cover 8-bit,16-bit and 32-bit operands. Not all SIMD operations are supported for 32 bits, meaning that some source code will not be able to be vectorized at all unless smaller operands are used.

User/Source Coding Rule 4. (M impact, ML generality) 避免在循环内部使用条件分支, 考虑使用SSE指令消除分支.

User/Source Coding Rule 5. (M impact, ML generality) 简化迭代变量表达式.

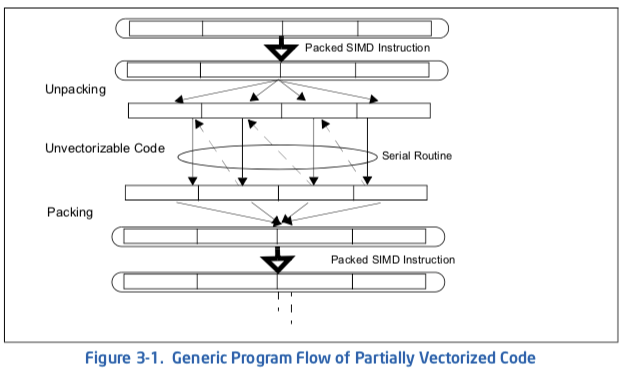

3.5.4 Optimization of Partially Vectorizable Code¶

Frequently, a program contains a mixture of vectorizable code and some routines that are non-vectorizable. A common situation of partially vectorizable code involves a loop structure which include mixtures of vectorized code and unvectorizable code. This situation is depicted in Figure 3-1.

It generally consists of five stages within the loop:

- Prolog.

- Unpacking vectorized data structure into individual elements.

- Calling a non-vectorizable routine to process each element serially.

- Packing individual result into vectorized data structure.

- Epilog.

This section discusses techniques that can reduce the cost and bottleneck associated with the packing/unpacking stages in these partially vectorize code.

Example 3-30 shows a reference code template that is representative of partially vectorizable coding situations that also experience performance issues. The unvectorizable portion of code is represented generically by a sequence of calling a serial function named “foo” multiple times. This generic example is referred to as “shuffle with store forwarding”, because the problem generally involves an unpacking stage that shuffles data elements between register and memory, followed by a packing stage that can experience store forwarding issue.

There are more than one useful techniques that can reduce the store-forwarding bottleneck between the serialized portion and the packing stage. The following sub-sections presents alternate techniques to deal with the packing, unpacking, and parameter passing to serialized function calls.

Example 3-30. Reference Code Template for Partially Vectorizable Program

; Prolog /////////////////////////////// push ebp

mov ebp, esp

; Unpacking //////////////////////////// sub ebp, 32

and ebp, 0xfffffff0

movaps [ebp], xmm0

; Serial operations on components /////// sub ebp, 4

mov eax, [ebp+4] mov [ebp], eax

call foo

mov [ebp+16+4], eax

mov eax, [ebp+8]

mov [ebp], eax

call foo

mov [ebp+16+4+4], eax

mov eax, [ebp+12]

mov [ebp], eax

call foo

mov [ebp+16+8+4], eax

mov eax, [ebp+12+4] mov [ebp], eax

call foo

mov [ebp+16+12+4], eax

; Packing /////////////////////////////// movaps xmm0, [ebp+16+4]

; Epilog //////////////////////////////// pop ebp

ret

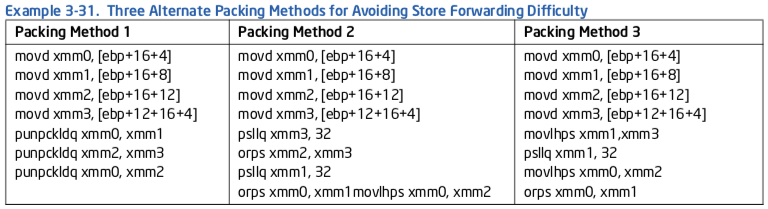

3.5.4.1 Alternate Packing Techniques¶

The packing method implemented in the reference code of Example 3-30 will experience delay as it assembles 4 doubleword result from memory into an XMM register due to store-forwarding restrictions.

Three alternate techniques for packing, using different SIMD instruction to assemble contents in XMM registers are shown in Example 3-31. All three techniques avoid store-forwarding delay by satisfying the restrictions on data sizes between a preceding store and subsequent load operations.

3.5.4.2 Simplifying Result Passing¶

In Example 3-30, individual results were passed to the packing stage by storing to contiguous memory locations. Instead of using memory spills to pass four results, result passing may be accomplished by using either one or more registers. Using registers to simplify result passing and reduce memory spills can improve performance by varying degrees depending on the register pressure at runtime.

Example 3-32 shows the coding sequence that uses four extra XMM registers to reduce all memory spills of passing results back to the parent routine. However, software must observe the following conditions when using this technique:

- There is no register shortage.

- If the loop does not have many stores or loads but has many computations, this technique does not help performance. This technique adds work to the computational units, while the store and loads ports are idle.

Example 3-32. Using Four Registers to Reduce Memory Spills and Simplify Result Passing

mov eax, [ebp+4]

mov [ebp], eax

call foo

movd xmm0, eax

mov eax, [ebp+8]

mov [ebp], eax

call foo

movd xmm1, eax

mov eax, [ebp+12]

mov [ebp], eax

call foo

movd xmm2, eax

mov eax, [ebp+12+4]

mov [ebp], eax

call foo

movd xmm3, eax

3.5.4.3 Stack Optimization¶

In Example 3-30, an input parameter was copied in turn onto the stack and passed to the non-vectorizable routine for processing. The parameter passing from consecutive memory locations can be simplified by a technique shown in Example 3-33.

Example 3-33. Stack Optimization Technique to Simplify Parameter Passing

call foo

mov [ebp+16], eax

add ebp, 4

call foo

mov [ebp+16], eax

add ebp, 4

call foo

mov [ebp+16], eax

add ebp, 4

call foo

Stack Optimization can only be used when:

- The serial operations are function calls. The function “foo” is declared as: INT FOO(INT A). The parameter is passed on the stack.

- The order of operation on the components is from last to first.

Note the call to FOO and the advance of EDP when passing the vector elements to FOO one by one from last to first.

3.5.4.4 Tuning Considerations¶

Tuning considerations for situations represented by looping of Example 3-30 include:

Applying one of more of the following combinations:

- Choose an alternate packing technique.

- Consider a technique to simply result-passing.

- Consider the stack optimization technique to simplify parameter passing.

Minimizing the average number of cycles to execute one iteration of the loop.

Minimizing the per-iteration cost of the unpacking and packing operations.

The speed improvement by using the techniques discussed in this section will vary, depending on the choice of combinations implemented and characteristics of the non-vectorizable routine. For example, if the routine “foo” is short (representative of tight, short loops), the per-iteration cost of unpacking/packing tend to be smaller than situations where the non-vectorizable code contain longer operation or many dependencies. This is because many iterations of short, tight loop can be in flight in the execution core, so the per-iteration cost of packing and unpacking is only partially exposed and appear to cause very little performance degradation.

Evaluation of the per-iteration cost of packing/unpacking should be carried out in a methodical manner over a selected number of test cases, where each case may implement some combination of the tech- niques discussed in this section. The per-iteration cost can be estimated by:

- Evaluating the average cycles to execute one iteration of the test case.

- Evaluating the average cycles to execute one iteration of a base line loop sequence of non-vectorizable code.

Example 3-34 shows the base line code sequence that can be used to estimate the average cost of a loop that executes non-vectorizable routines.

Example 3-34. Base Line Code Sequence to Estimate Loop Overhead

push ebp

mov ebp, esp

sub ebp, 4

mov [ebp], edi

call foo

mov [ebp], edi

call foo

mov [ebp], edi

call foo

mov [ebp], edi

call foo

add ebp, 4

pop ebp

ret

The average per-iteration cost of packing/unpacking can be derived from measuring the execution times of a large number of iterations by:

((Cycles to run TestCase) - (Cycles to run equivalent baseline sequence) ) / (Iteration count).

For example, using a simple function that returns an input parameter (representative of tight, short loops), the per-iteration cost of packing/unpacking may range from slightly more than 7 cycles (the shuffle with store forwarding case, Example 3-30) to ~0.9 cycles (accomplished by several test cases). Across 27 test cases (consisting of one of the alternate packing methods, no result-simplification/simplification of either 1 or 4 results, no stack optimization or with stack optimization), the average per-iteration cost of packing/unpacking is about 1.7 cycles.

Generally speaking, packing method 2 and 3 (see Example 3-31) tend to be more robust than packing method 1; the optimal choice of simplifying 1 or 4 results will be affected by register pressure of the runtime and other relevant microarchitectural conditions.

Note that the numeric discussion of per-iteration cost of packing/packing is illustrative only. It will vary with test cases using a different base line code sequence and will generally increase if the non-vectorizable routine requires longer time to execute because the number of loop iterations that can reside in flight in the execution core decreases.

3.6 优化内存访问¶

This section discusses guidelines for optimizing code and data memory accesses. The most important recommendations are:

- Execute load and store operations within available execution bandwidth.

- Enable forward progress of speculative execution. (???)

- Enable store forwarding to proceed. (???)

- 对齐数据, 注意数据布局和栈对齐

- Place code and data on separate pages.

- 增强数据局部性

- 使用预取和缓存控制指令

- Enhance code locality and align branch targets.

- Take advantage of write combining.

Alignment and forwarding problems are among the most common sources of large delays on processors based on Intel NetBurst microarchitecture.

3.6.1 Load and Store Execution Bandwidth¶

Typically, loads and stores are the most frequent operations in a workload, up to 40% of the instructions in a workload carrying load or store intent are not uncommon. Each generation of microarchitecture provides multiple buffers to support executing load and store operations while there are instructions in flight.

Software can maximize memory performance by not exceeding the issue or buffering limitations of the machine. In the Intel Core microarchitecture, only 20 stores and 32 loads may be in flight at once. In Intel microarchitecture code name Nehalem, there are 32 store buffers and 48 load buffers. Since only one load can issue per cycle, algorithms which operate on two arrays are constrained to one operation every other cycle unless you use programming tricks to reduce the amount of memory usage.

Intel Core Duo and Intel Core Solo processors have less buffers. Nevertheless the general heuristic applies to all of them.

3.6.1.1 Make Use of Load Bandwidth in Intel Microarchitecture Code Name Sandy Bridge¶

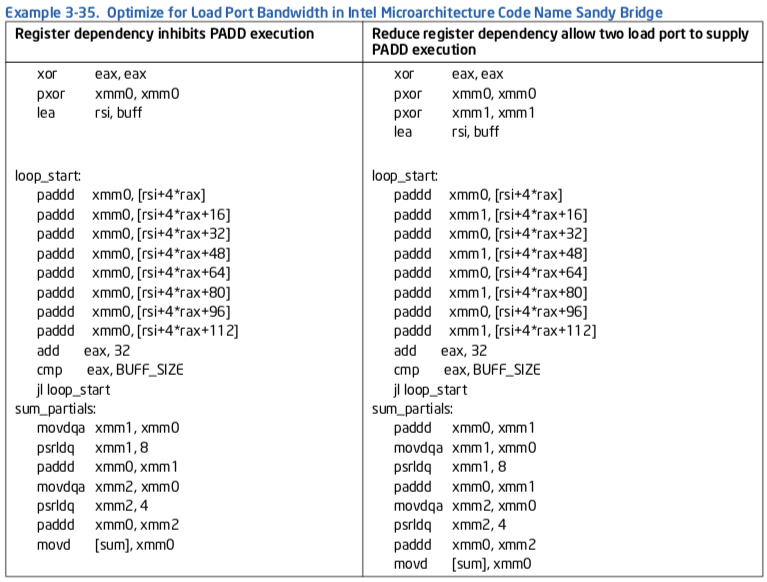

While prior microarchitecture has one load port (port 2), Intel microarchitecture code name Sandy Bridge can load from port 2 and port 3. Thus two load operations can be performed every cycle and doubling the load throughput of the code. This improves code that reads a lot of data and does not need to write out results to memory very often (Port 3 also handles store-address operation). To exploit this bandwidth, the data has to stay in the L1 data cache or it should be accessed sequentially, enabling the hardware prefetchers to bring the data to the L1 data cache in time.

Consider the following C code example of adding all the elements of an array:

int buff[BUFF_SIZE];

int sum = 0;

for (i=0; i<BUFF_SIZE; i++) {

sum += buff[i];

}

Alternative 1 is the assembly code generated by the Intel compiler for this C code, using the optimization flag for Intel microarchitecture code name Nehalem. The compiler vectorizes execution using Intel SSE instructions. In this code, each ADD operation uses the result of the previous ADD operation. This limits the throughput to one load and ADD operation per cycle. Alternative 2 is optimized for Intel microarchitecture code name Sandy Bridge by enabling it to use the additional load bandwidth. The code removes the dependency among ADD operations, by using two registers to sum the array values. Two load and two ADD operations can be executed every cycle.

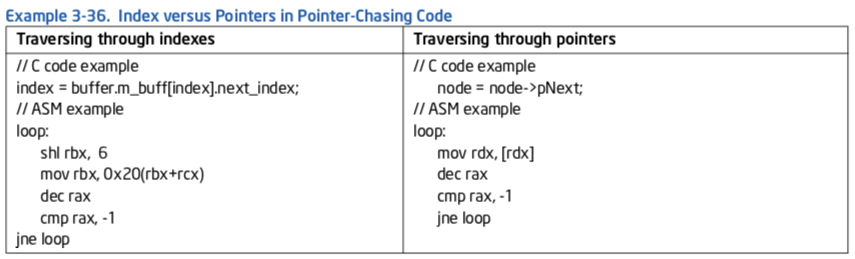

3.6.1.2 L1D Cache Latency in Intel Microarchitecture Code Name Sandy Bridge¶

Load latency from L1D cache may vary (see Table 2-21). The best case if 4 cycles, which apply to load operations to general purpose registers using one of the following:

- One register.

- A base register plus an offset that is smaller than 2048

Consider the pointer-chasing code example in Example 3-36.

The left side implements pointer chasing via traversing an index. Compiler then generates the code shown below addressing memory using base+index with an offset. The right side shows compiler generated code from pointer de-referencing code and uses only a base register.

zzq注解 看起来pNext应该是node结构体的第1个成员

The code on the right side is faster than the left side across Intel microarchitecture code name Sandy Bridge and prior microarchitecture. However the code that traverses index will be slower on Intel microarchitecture code name Sandy Bridge relative to prior microarchitecture.

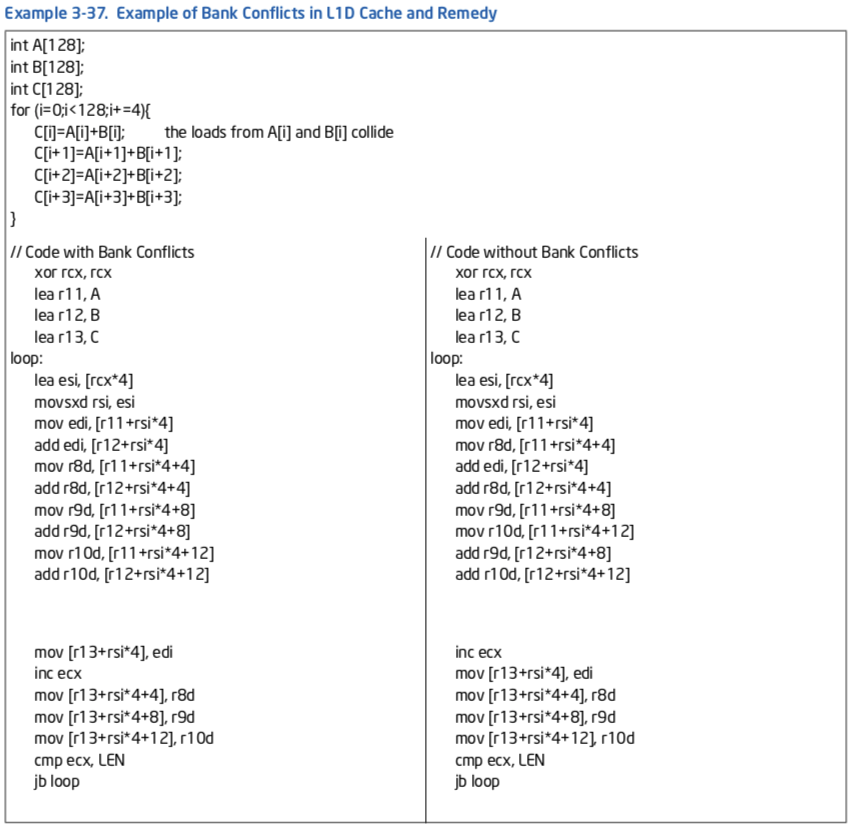

3.6.1.3 Handling L1D Cache Bank Conflict¶

In Intel microarchitecture code name Sandy Bridge, the internal organization of the L1D cache may manifest a situation when two load micro-ops whose addresses have a bank conflict. When a bank conflict is present between two load operations, the more recent one will be delayed until the conflict is resolved. A bank conflict happens when two simultaneous load operations have the same bit 2-5 of their linear address but they are not from the same set in the cache (bits 6 - 12).

Bank conflicts should be handled only if the code is bound by load bandwidth. Some bank conflicts do not cause any performance degradation since they are hidden by other performance limiters. Eliminating such bank conflicts does not improve performance.

The following example demonstrates bank conflict and how to modify the code and avoid them. It uses two source arrays with a size that is a multiple of cache line size. When loading an element from A and the counterpart element from B the elements have the same offset in their cache lines and therefore a bank conflict may happen.

With the Haswell microarchitecture, the L1 DCache bank conflict issue does not apply.

3.6.2 Minimize Register Spills¶

When a piece of code has more live variables than the processor can keep in general purpose registers, a common method is to hold some of the variables in memory. This method is called register spill. The effect of L1D cache latency can negatively affect the performance of this code. The effect can be more pronounced if the address of register spills uses the slower addressing modes.

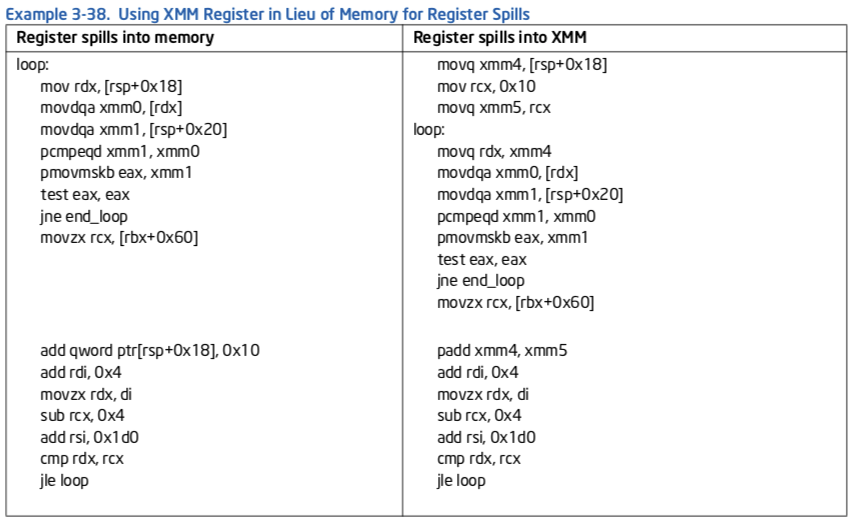

One option is to spill general purpose registers to XMM registers. This method is likely to improve performance also on previous processor generations. The following example shows how to spill a register to an XMM register rather than to memory.

3.6.3 Enhance Speculative Execution and Memory Disambiguation¶

Prior to Intel Core microarchitecture, when code contains both stores and loads, the loads cannot be issued before the address of the store is resolved. This rule ensures correct handling of load dependencies on preceding stores.

The Intel Core microarchitecture contains a mechanism that allows some loads to be issued early speculatively. The processor later checks if the load address overlaps with a store. If the addresses do overlap, then the processor re-executes the instructions.

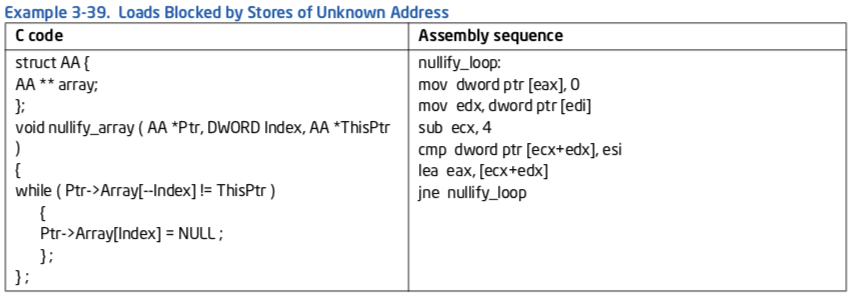

Example 3-39 illustrates a situation that the compiler cannot be sure that “Ptr->Array” does not change during the loop. Therefore, the compiler cannot keep “Ptr->Array” in a register as an invariant and must read it again in every iteration. Although this situation can be fixed in software by a rewriting the code to require the address of the pointer is invariant, memory disambiguation provides performance gain without rewriting the code.

3.6.4 Alignment¶

数据对齐与所有类型的变量有关:

- 动态分配的变量

- 数据结构的成员

- 全局或局部变量

- 在栈上传递的参数

未对齐的数据访问会导致严重的性能损失, 对cache line分割尤其如此. Pentium 4及之后的处理器的cache line大小是64字节.

访问未在64字节边界对齐的数据会产生两次内存访问, 并需要执行多条微指令. 跨64字节边界的访问很可能会导致大的性能损失, 在拥有更长流水线上的处理器上每个stall的开销更大.

Double-precision floating-point operands that are eight-byte aligned have better performance than operands that are not eight-byte aligned, since they are less likely to incur penalties for cache and MOB splits. Floating-point operation on a memory operands require that the operand be loaded from memory. This incurs an additional μop, which can have a minor negative impact on front end bandwidth. Additionally, memory operands may cause a data cache miss, causing a penalty.

Assembly/Compiler Coding Rule 45. (H impact, H generality) Align data on natural operand size address boundaries. If the data will be accessed with vector instruction loads and stores, align the data on 16-byte boundaries.

For best performance, align data as follows:

- Align 8-bit data at any address.

- Align 16-bit data to be contained within an aligned 4-byte word.

- Align 32-bit data so that its base address is a multiple of four.

- Align 64-bit data so that its base address is a multiple of eight.

- Align 80-bit data so that its base address is a multiple of sixteen.

- Align 128-bit data so that its base address is a multiple of sixteen.

A 64-byte or greater data structure or array should be aligned so that its base address is a multiple of 64. Sorting data in decreasing size order is one heuristic for assisting with natural alignment. As long as 16 byte boundaries (and cache lines) are never crossed, natural alignment is not strictly necessary (though it is an easy way to enforce this).

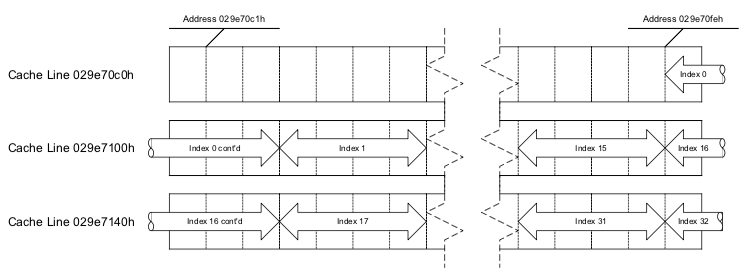

Example 3-40 shows the type of code that can cause a cache line split. The code loads the addresses of two DWORD arrays. 029E70FEH is not a 4-byte-aligned address, so a 4-byte access at this address will get 2 bytes from the cache line this address is contained in, and 2 bytes from the cache line that starts at 029E700H. On processors with 64-byte cache lines, a similar cache line split will occur every 8 iterations.

Example 3-40. Code That Causes Cache Line Split

mov esi, 029e70feh

mov edi, 05be5260h

Blockmove:

mov eax, DWORD PTR [esi]

mov ebx, DWORD PTR [esi+4]

mov DWORD PTR [edi], eax

mov DWORD PTR [edi+4], ebx

add esi, 8

add edi, 8

sub edx, 1

jnz Blockmove

Figure 3-2 illustrates the situation of accessing a data element that span across cache line boundaries.

Alignment of code is less important for processors based on Intel NetBurst microarchitecture. Alignment of branch targets to maximize bandwidth of fetching cached instructions is an issue only when not executing out of the trace cache.

Alignment of code can be an issue for the Pentium M, Intel Core Duo and Intel Core 2 Duo processors. Alignment of branch targets will improve decoder throughput.

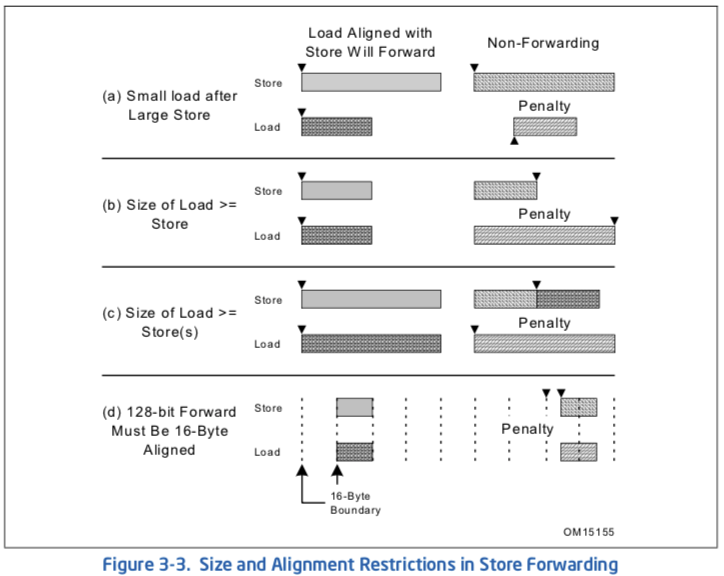

3.6.5 Store Forwarding¶

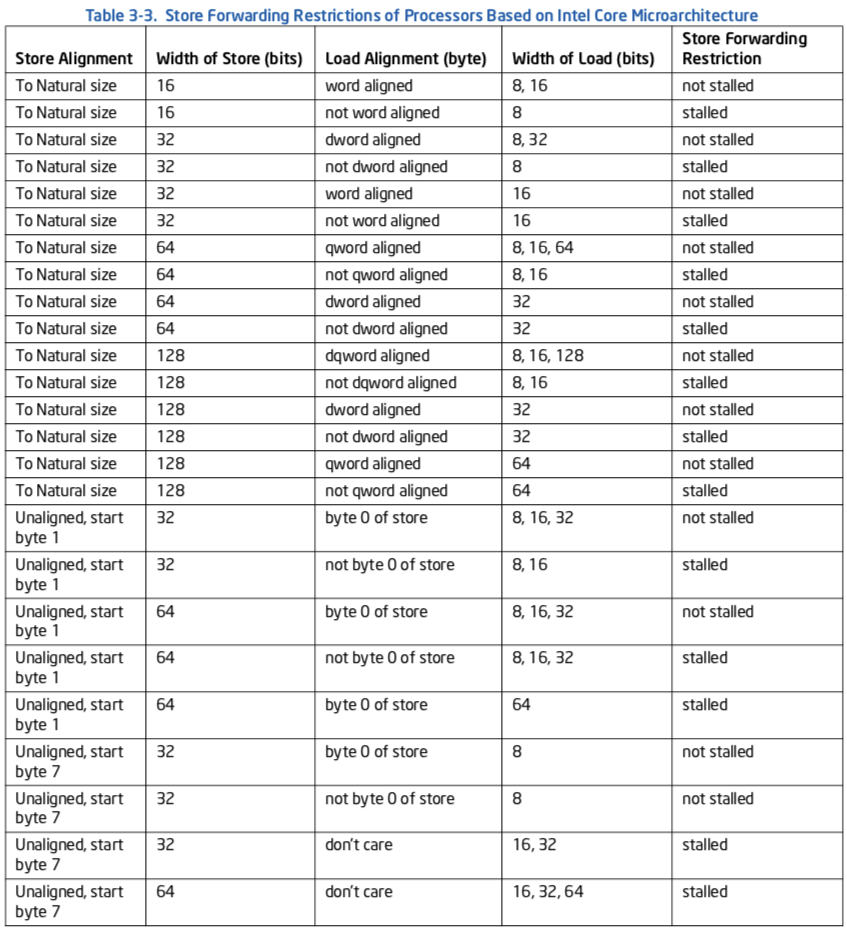

The processor’s memory system only sends stores to memory (including cache) after store retirement. However, store data can be forwarded from a store to a subsequent load from the same address to give a much shorter store-load latency.